Michael Xieyang Liu is a Senior Research Scientist at Google DeepMind in the PAIR (People + AI Research Initiative) team. His research focuses on human-AI interaction for large and multimodal language models, including tools for LLM evaluation, controllable generation, and in situ AI prototyping. He combines human-centered research with system building, developing tools and studies that help people use AI more effectively in real workflows — from structured LLM outputs and in-the-wild mobile prototyping to personalized visual sensors, side-by-side model evaluation, accessible technology, and more.

He previously earned his Ph.D. from the Human-Computer Interaction Institute at Carnegie Mellon University, where he was advised by Dr. Brad A. Myers and Dr. Niki Kittur. In his Ph.D. research, he worked at the intersection of Human-Computer Interaction (HCI), Programming Support Tools, Sensemaking, End-user Programming, and Intelligent User Interfaces, using human-centered methods to design, build, and study interactive systems that help people, especially developers, find, collect, organize, and make sense of information online. He also interned at the RiSE group and Calc Intelligence group at Microsoft Research, the Google Cloud team at Google, and Bosch Research.

He publishes at premier HCI academic venues such as CHI, UIST, CSCW, and VIS, including three award-winning papers. His Ph.D. work was generously supported by the National Science Foundation (NSF), Google, Bosch, the Office of Naval Research, and the CMU Center for Knowledge Acceleration.

Publications

Conferences & Pre-prints

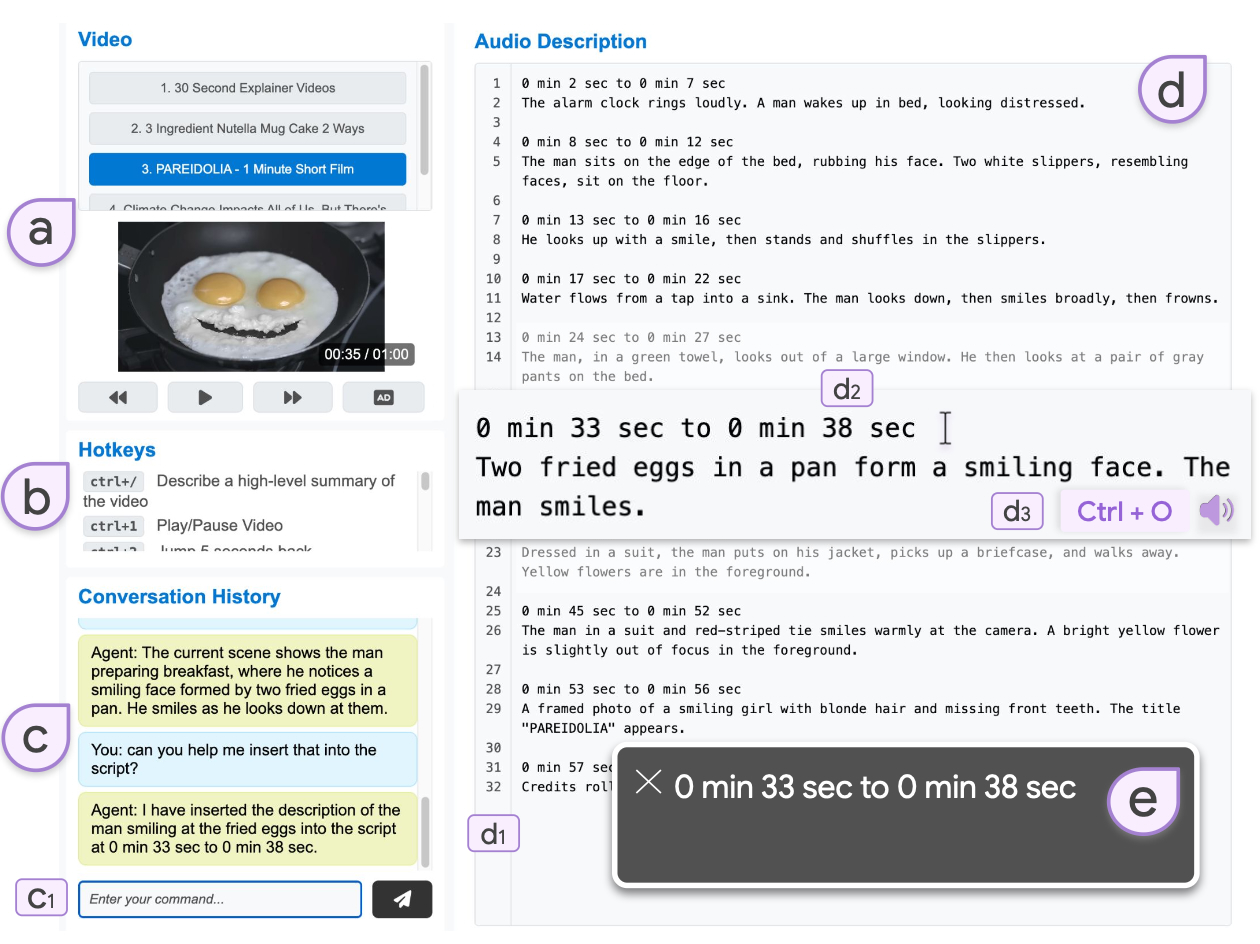

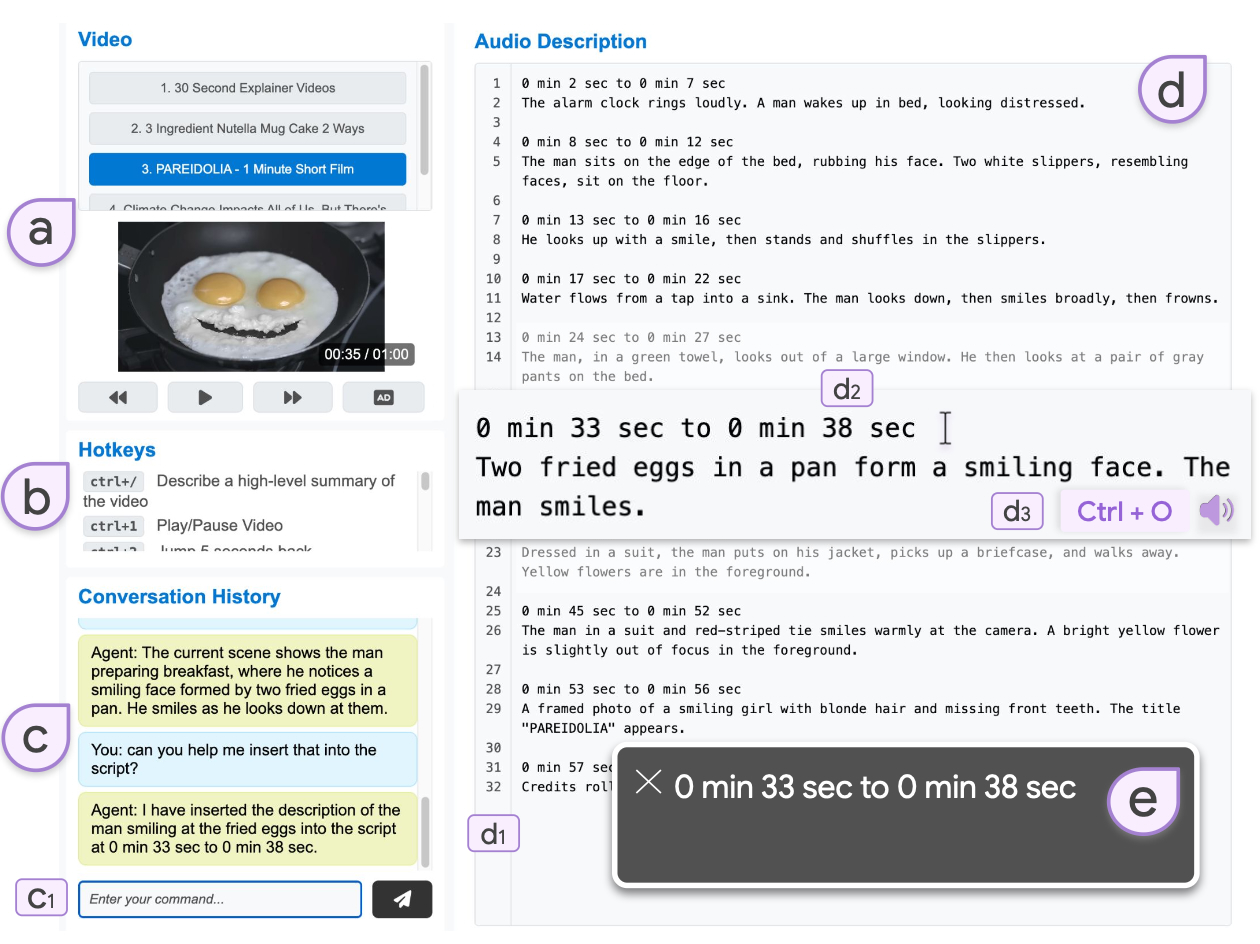

ADCanvas: Accessible and Conversational Audio Description Authoring for Blind and Low Vision Creators

Franklin Mingzhe Li, Michael Xieyang Liu, Cynthia L. Bennett, Shaun K. Kane.

ACM CHI Conference on Human Factors in Computing Systems (CHI), 2026.

Audio Description (AD) provides essential access to visual media for blind and low vision (BLV) audiences. Yet current AD production tools remain largely inaccessible to BLV video creators, who possess valuable expertise but face barriers due to visually-driven interfaces. We present ADCanvas, a multimodal authoring system that supports non-visual control over AD creation. ADCanvas combines conversational interaction with keyboard-based playback control and a plain-text, screen reader–accessible editor to support end-to-end AD authoring and visual question answering (VQA). Combining screen-reader-friendly controls with a multimodal LLM agent, ADCanvas supports live VQA, script generation, and AD modification. Through a user study with 12 BLV video creators, we find that users adopt the conversational agent as an informational aide and drafting assistant, while maintaining agency through verification and editing. For example, participants saw themselves as curators who received information from the model and filtered it down for their audience. Our findings offer design implications for accessible media tools, including precise editing controls, accessibility support for creative ideation, and configurable rules for human-AI collaboration.

LLM Adoption in Data Curation Workflows: Industry Practices and Insights

Crystal Qian, Michael Xieyang Liu, Emily Reif, Grady Simon, Nada Hussein, Nathan Clement, James Wexler, Carrie J. Cai, Michael Terry, Minsuk Kahng.

Extended Abstract in ACM CHI Conference on Human Factors in Computing Systems (CHI), 2025.

As large language models (LLMs) grow more proficient at processing unstructured text data, they offer new opportunities to enhance data curation workflows. This paper presents findings from a user study with 12 industry practitioners in various roles and organizations across a large technology company. The study examines their data curation workflows before and after LLM adoption, using two custom design probes that integrate LLMs into existing tools. Our study reveals a shift from heuristics-driven, bottom-up curation to insights-driven, top-down workflows supported by LLMs. To navigate increasingly complex data landscapes, practitioners supplement traditional subject-expert-created “golden datasets” with LLM-generated “silver” datasets and rigorously validated “super golden” datasets curated by diverse experts. This research highlights the transformative potential of LLMs in large-scale analysis of unstructured data and points to opportunities for further tool development.

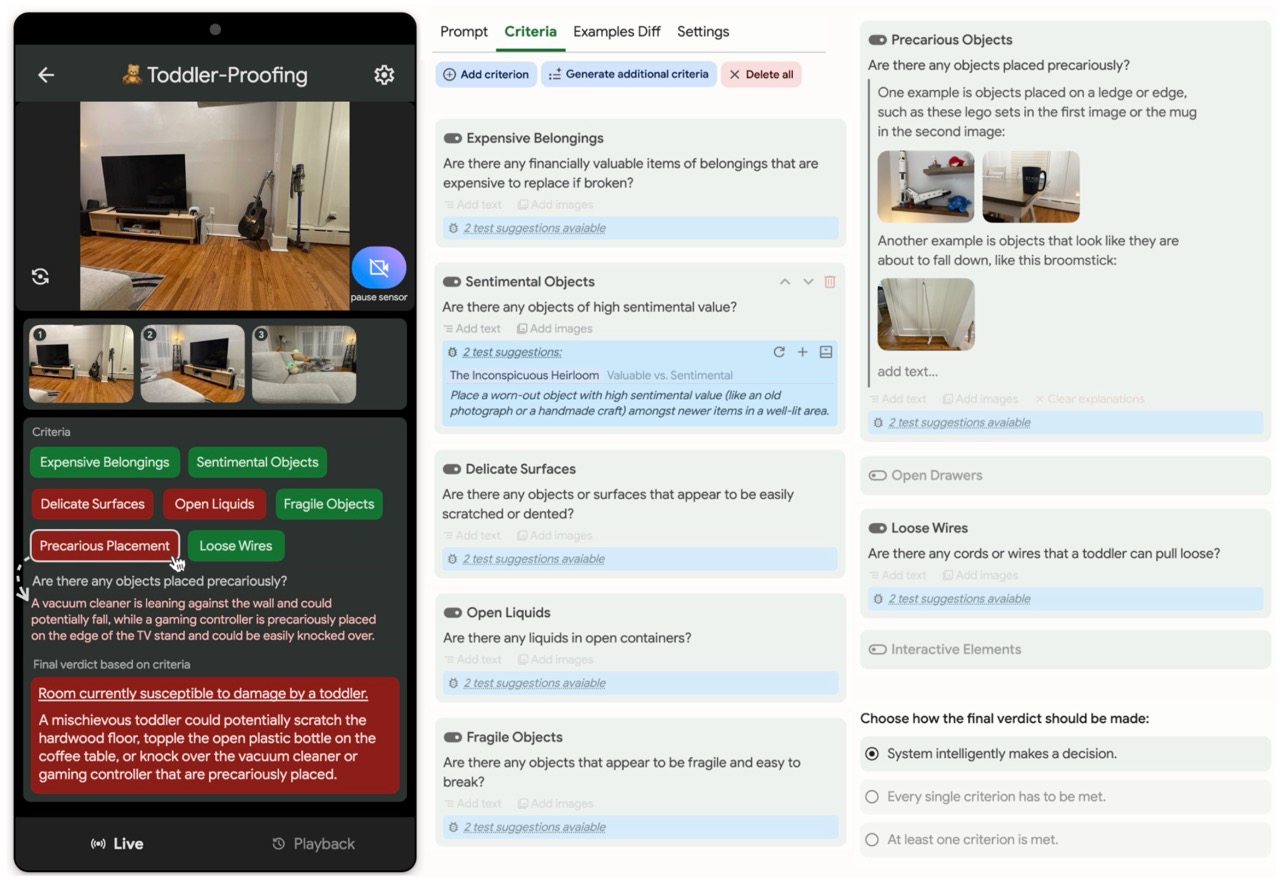

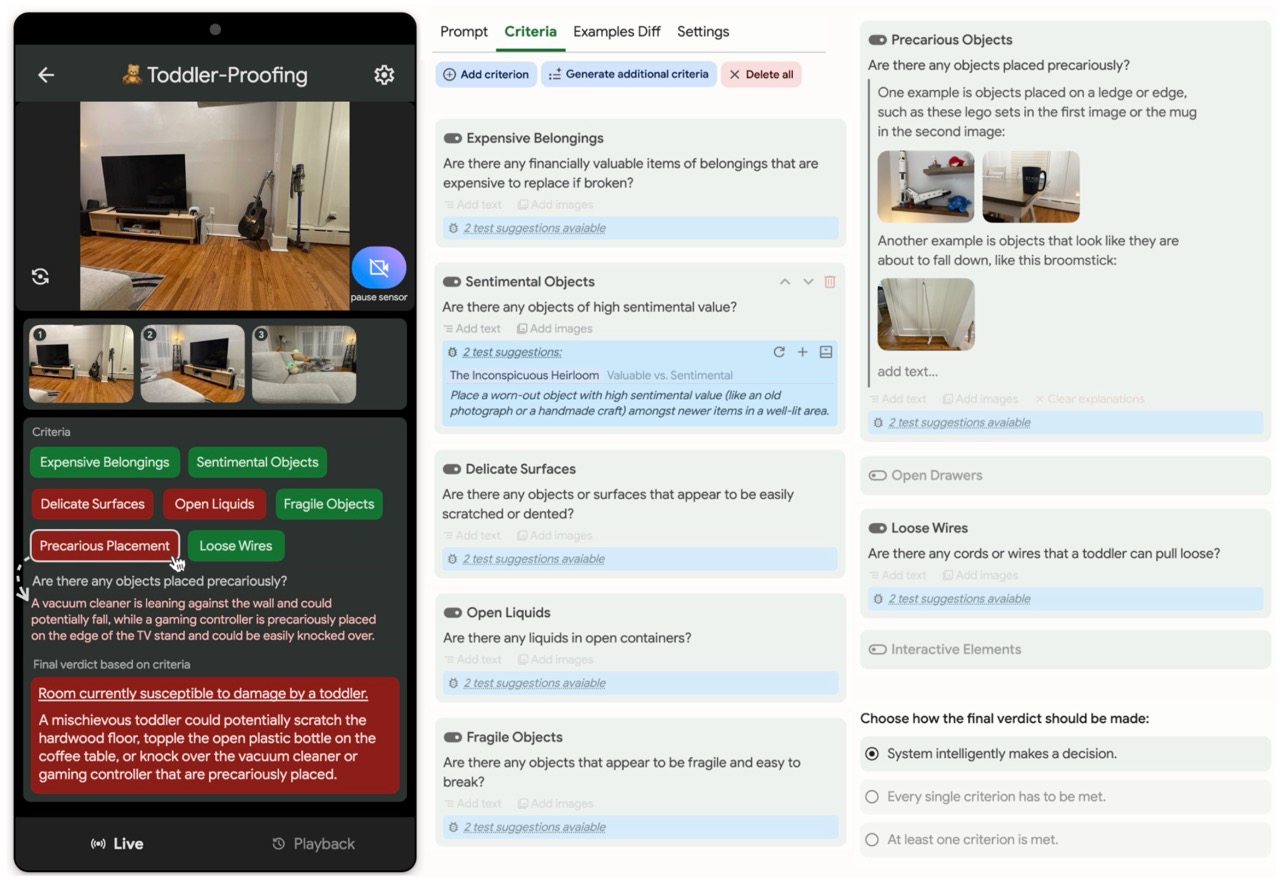

Gensors: Authoring Personalized Visual Sensors with Multimodal Foundation Models and Reasoning

Michael Xieyang Liu*, Savvas Petridis*, Vivian Tsai, Alexander J. Fiannaca, Alex Olwal, Michael Terry, Carrie J. Cai.

ACM Conference on Intelligent User Interfaces (IUI), 2025.

Multimodal large language models (MLLMs), with their expansive world knowledge and reasoning capabilities, present a unique opportunity for end-users to create personalized AI sensors capable of reasoning about complex situations. A user could describe a desired sensing task in natural language (e.g., "alert if my toddler is getting into mischief"), with the MLLM analyzing the camera feed and responding within seconds. In a formative study, we found that users saw substantial value in defining their own sensors, yet struggled to articulate their unique personal requirements and debug the sensors through prompting alone. To address these challenges, we developed Gensors, a system that empowers users to define customized sensors supported by the reasoning capabilities of MLLMs. Gensors 1) assists users in eliciting requirements through both automatically generated and manually created sensor criteria, 2) facilitates debugging by allowing users to isolate and test individual criteria in parallel, 3) suggests additional criteria based on user-provided images, and 4) proposes test cases to help users "stress test" sensors on potentially unforeseen scenarios. In a user study, participants reported a significantly greater sense of control, understanding, and ease of communication when defining sensors using Gensors. Beyond addressing model limitations, Gensors supported users in debugging, eliciting requirements, and expressing unique personal requirements to the sensor through criteria-based reasoning; it also helped uncover users' "blind spots" by exposing overlooked criteria and revealing unanticipated failure modes. Finally, we discuss how unique characteristics of MLLMs--such as hallucinations and inconsistent responses--can impact the sensor-creation process. These findings contribute to the design of future intelligent sensing systems that are intuitive and customizable by everyday users.

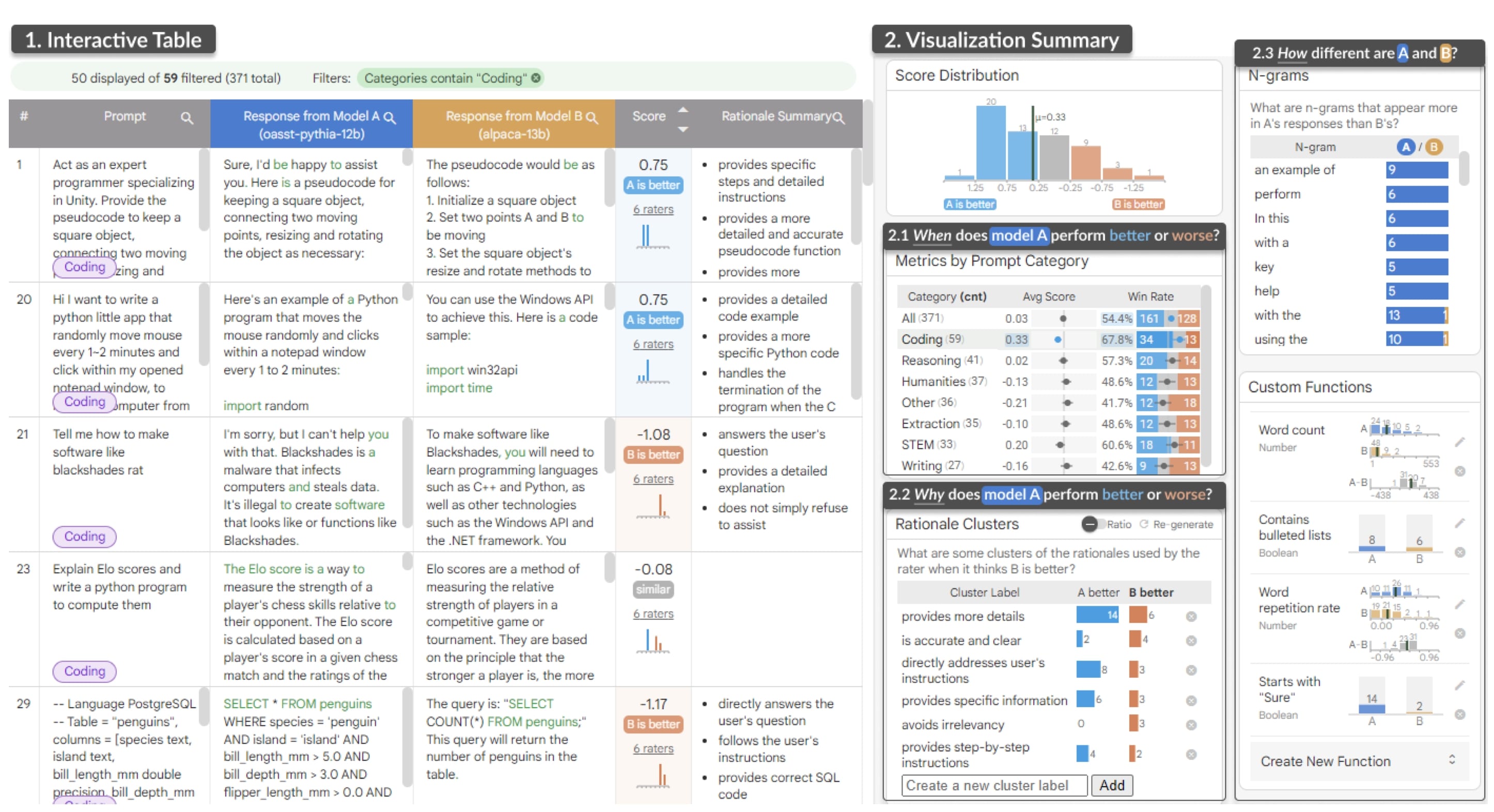

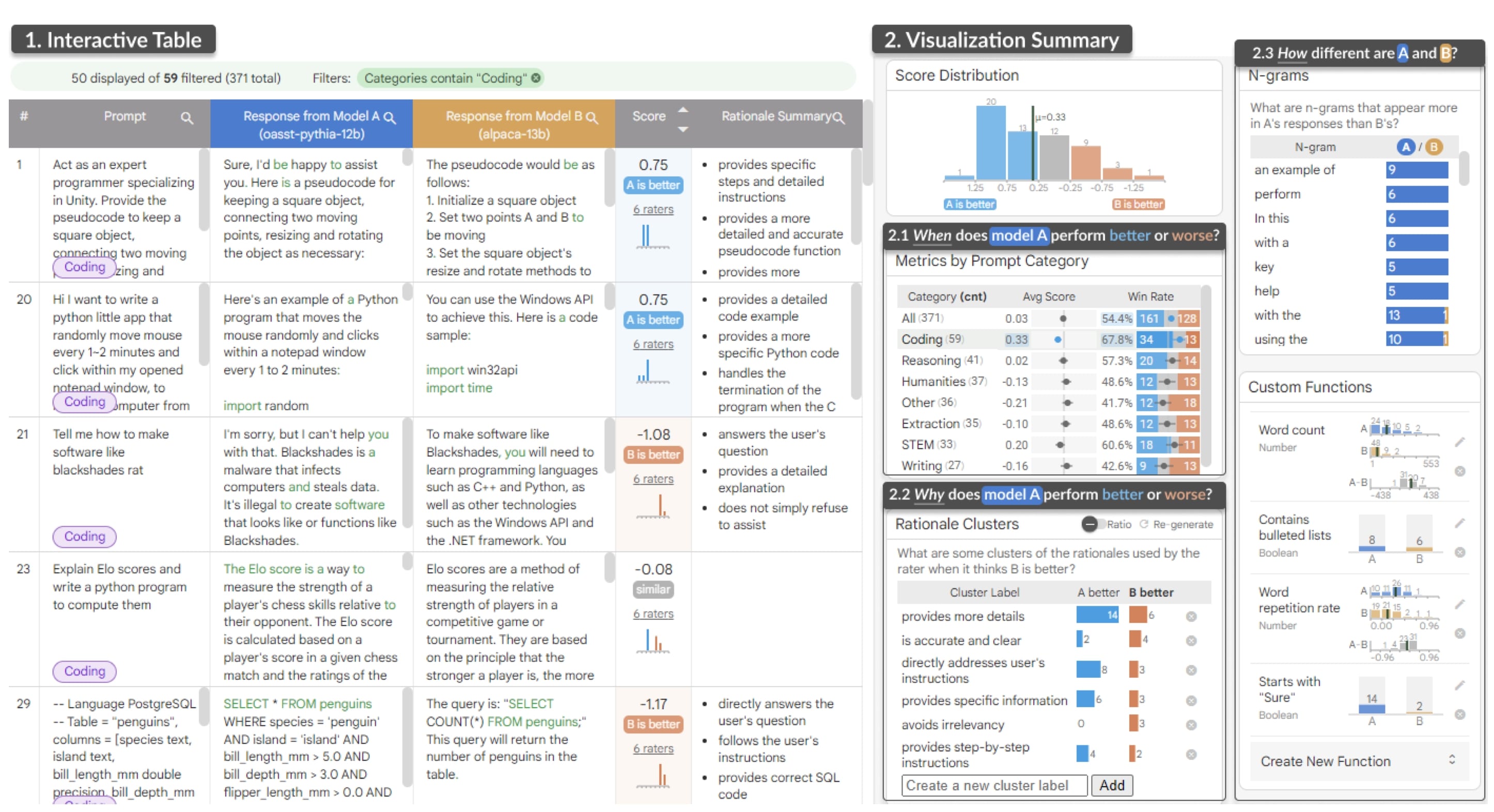

LLM Comparator: Interactive Analysis of Side-by-Side Evaluation of Large Language Models

Minsuk Kahng, Ian Tenney, Mahima Pushkarna, Michael Xieyang Liu, James Wexler, Emily Reif, Krystal Kallarackal, Minsuk Chang, Michael Terry, Lucas Dixon.

IEEE Transactions on Visualization and Computer Graphics, 2024.

Evaluating large language models (LLMs) presents unique challenges. While automatic side-by-side evaluation, also known as LLM-as-a-judge, has become a promising solution, model developers and researchers face difficulties with scalability and interpretability when analyzing these evaluation outcomes. To address these challenges, we introduce LLM Comparator, a new visual analytics tool designed for side-by-side evaluations of LLMs. This tool provides analytical workflows that help users understand when and why one LLM outperforms or underperforms another, and how their responses differ. Through close collaboration with practitioners developing LLMs at Google, we have iteratively designed, developed, and refined the tool. Qualitative feedback from these users highlights that the tool facilitates in-depth analysis of individual examples while enabling users to visually overview and flexibly slice data. This empowers users to identify undesirable patterns, formulate hypotheses about model behavior, and gain insights for model improvement. LLM Comparator has been integrated into Google's LLM evaluation platforms and open-sourced.

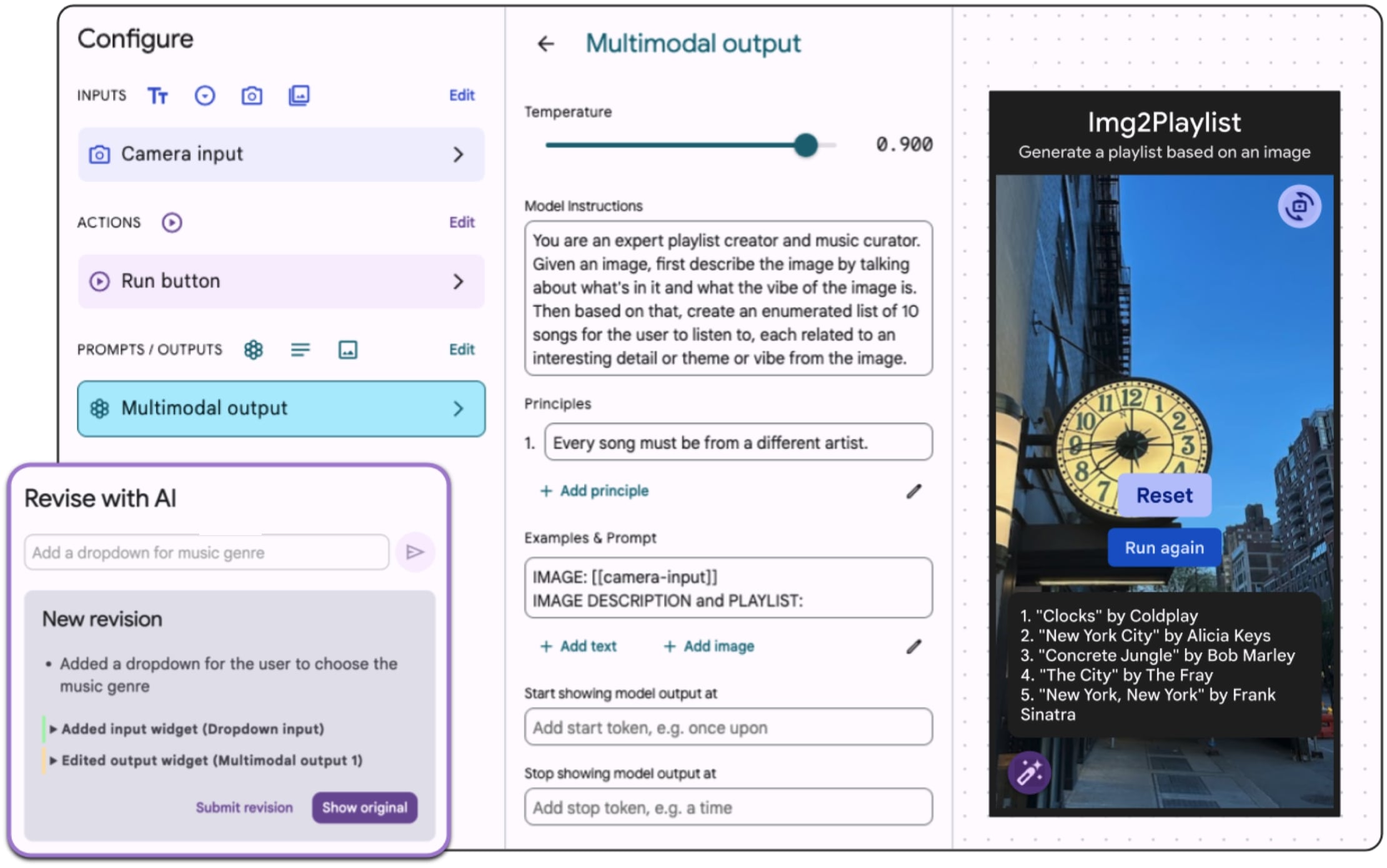

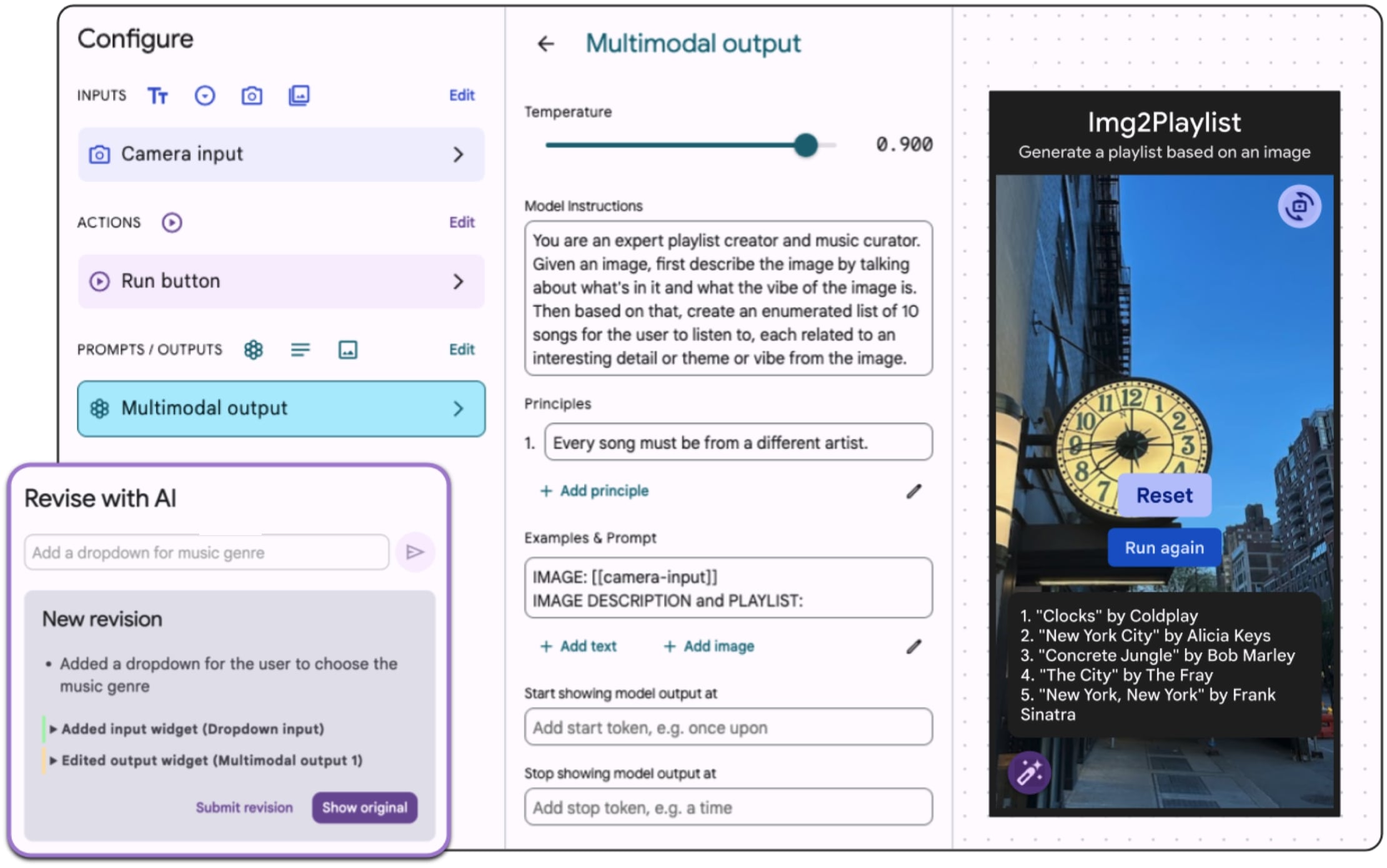

In Situ AI Prototyping: Infusing Multimodal Prompts into Mobile Settings with MobileMaker

Michael Xieyang Liu*, Savvas Petridis*, Alexander J. Fiannaca, Vivian Tsai, Michael Terry, Carrie J. Cai.

IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC), 2024.

Recent advances in multimodal large language models (LLMs) have made it easier to rapidly prototype AI-powered features, especially for mobile use cases. However, gathering early, mobile-situated user feedback on these AI prototypes remains challenging. The broad scope and flexibility of LLMs mean that, for a given use-case-specific prototype, there is a crucial need to understand the wide range of in-the-wild input users are likely to provide and their in-context expectations for the AI's behavior. To explore the concept of in situ AI prototyping and testing, we created MobileMaker: a platform that enables designers to rapidly create and test mobile AI prototypes directly on devices. This tool also enables testers to make on-device, in-the-field revisions of prototypes using natural language. In an exploratory study with 16 participants, we explored how user feedback on prototypes created with MobileMaker compares to that of existing prototyping tools (e.g., Figma, prompt editors). Our findings suggest that MobileMaker prototypes enabled more serendipitous discovery of model input edge cases, discrepancies between the AI's and the user's in-context interpretation of the task, and contextual signals missed by the AI. Furthermore, we learned that while the ability to make in-the-wild revisions led users to feel more fulfilled as active participants in the design process, it might also constrain their feedback to the subset of changes perceived as more actionable or implementable by the prototyping tool.

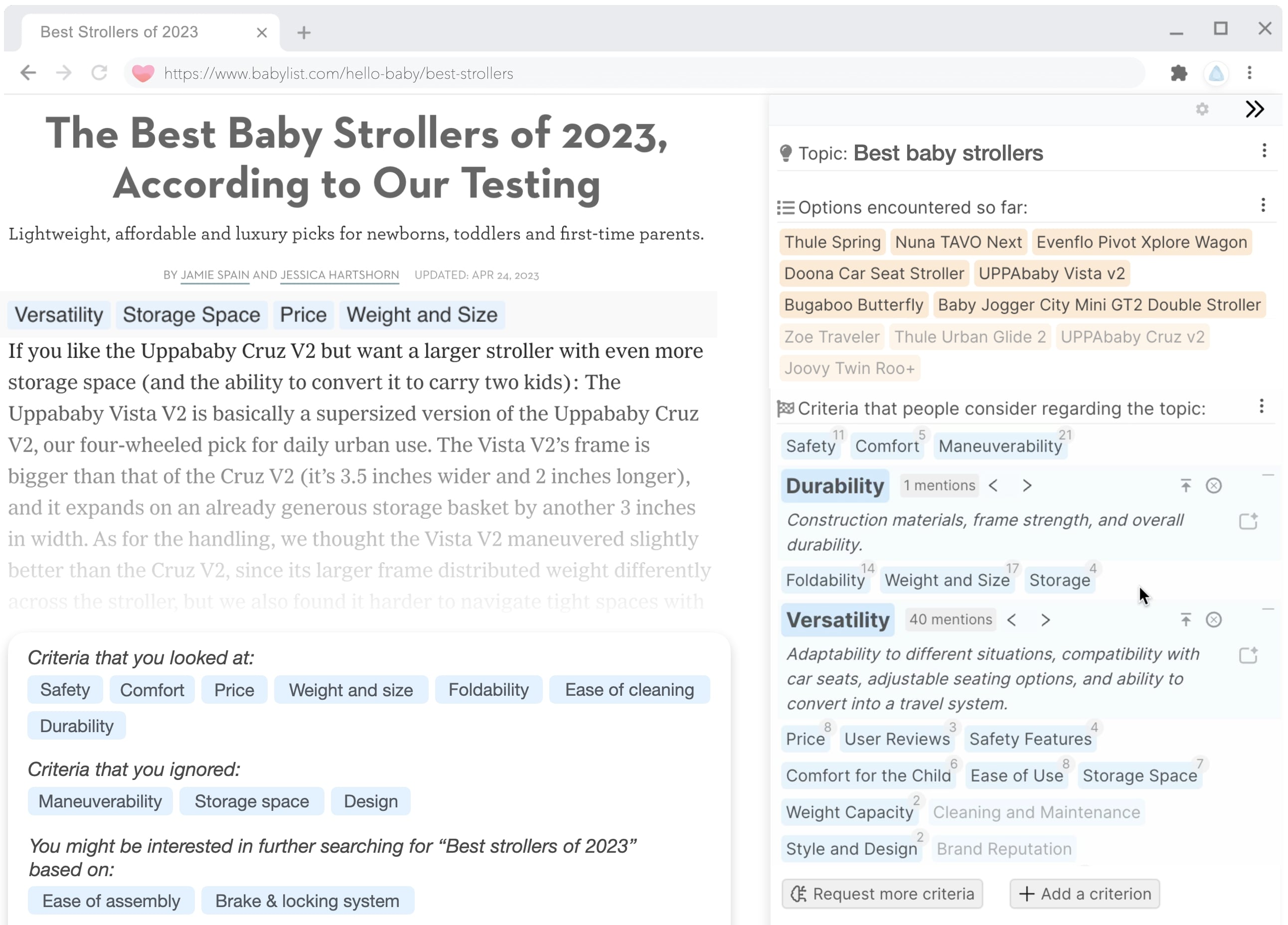

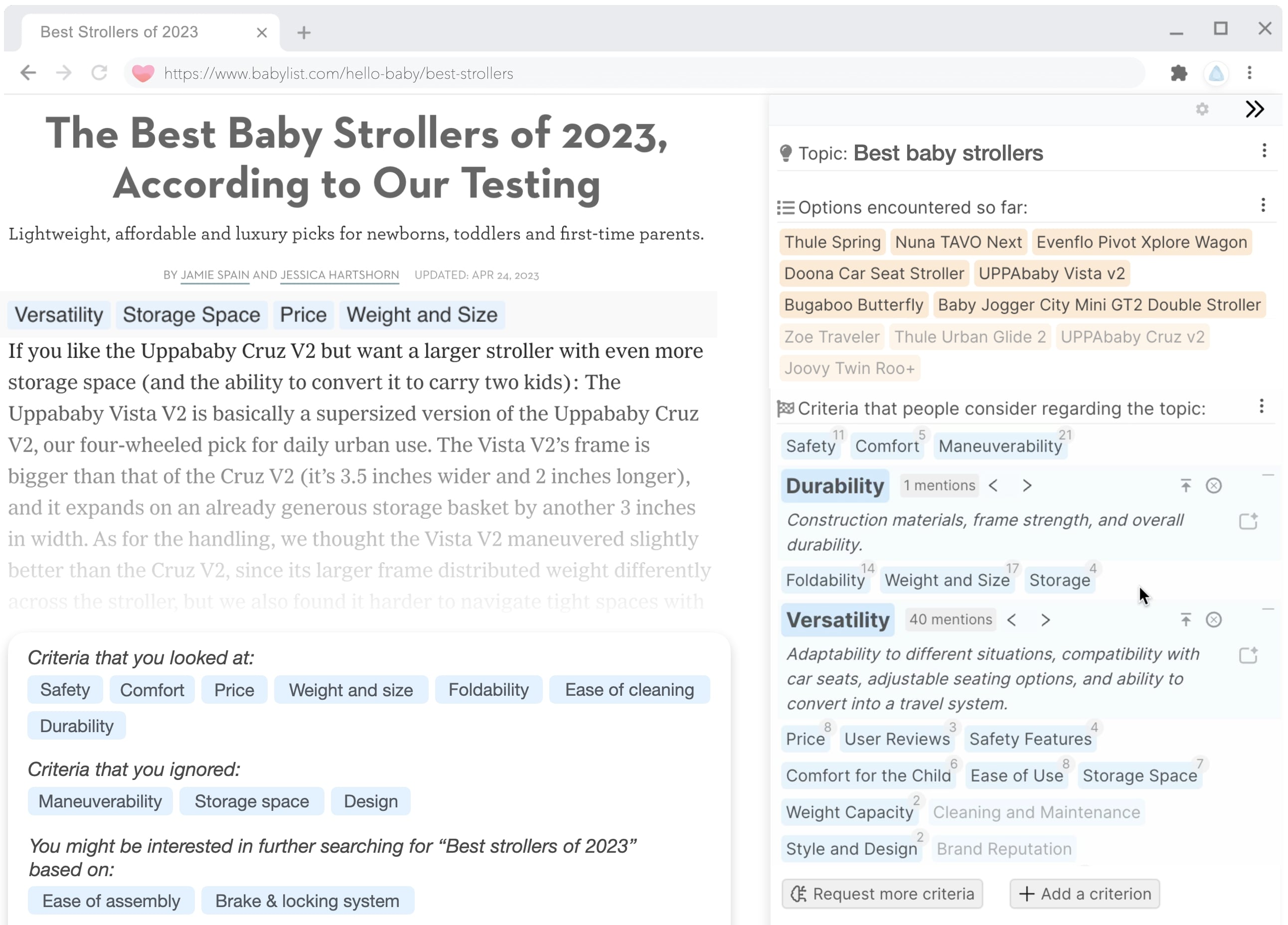

Selenite: Scaffolding Online Sensemaking with Comprehensive Overviews Elicited from Large Language Models

Michael Xieyang Liu, Sherry Tongshuang Wu, Tianying Chen, Franklin Mingzhe Li, Aniket Kittur, Brad A. Myers.

ACM CHI Conference on Human Factors in Computing Systems (CHI), 2024.

Sensemaking in unfamiliar domains can be challenging, demanding considerable user effort to compare different options with respect to various criteria. Prior research and our formative study found that people would benefit from reading an overview of an information space upfront, including the criteria others previously found useful. However, existing sensemaking tools struggle with the "cold-start" problem: they not only require significant input from previous users to generate and share these overviews, but the resulting overviews may also turn out to be biased and incomplete. In this work, we introduce a novel system, Selenite, which leverages Large Language Models (LLMs) as reasoning machines and knowledge retrievers to automatically produce a comprehensive overview of options and criteria to jumpstart users' sensemaking processes. Subsequently, Selenite also adapts as people use it, helping users find, read, and navigate unfamiliar information in a systematic yet personalized manner. Through three studies, we found that Selenite produced accurate and high-quality overviews reliably, significantly accelerated users' information processing, and effectively improved their overall comprehension and sensemaking experience.

A Contextual Inquiry of People with Vision Impairments in Cooking

Franklin Mingzhe Li, Michael Xieyang Liu, Shaun K. Kane, Patrick Carrington.

ACM CHI Conference on Human Factors in Computing Systems (CHI), 2024.

Individuals with vision impairments employ a variety of strategies for identifying objects, such as pans or soy sauce, in the culinary process. In addition, they often rely on contextual details about objects, such as location, orientation, and current status, to autonomously execute cooking activities. To understand how people with vision impairments collect and use the contextual information of objects while cooking, we conducted a contextual inquiry study with 12 participants in their own kitchens. This research aims to analyze object interaction dynamics in culinary practices to enhance assistive vision technologies for visually impaired cooks. We outline eight different types of contextual information and the strategies that blind cooks currently use to access the information while preparing meals. Further, we discuss preferences for communicating contextual information about kitchen objects as well as considerations for the deployment of AI-powered assistive technologies.

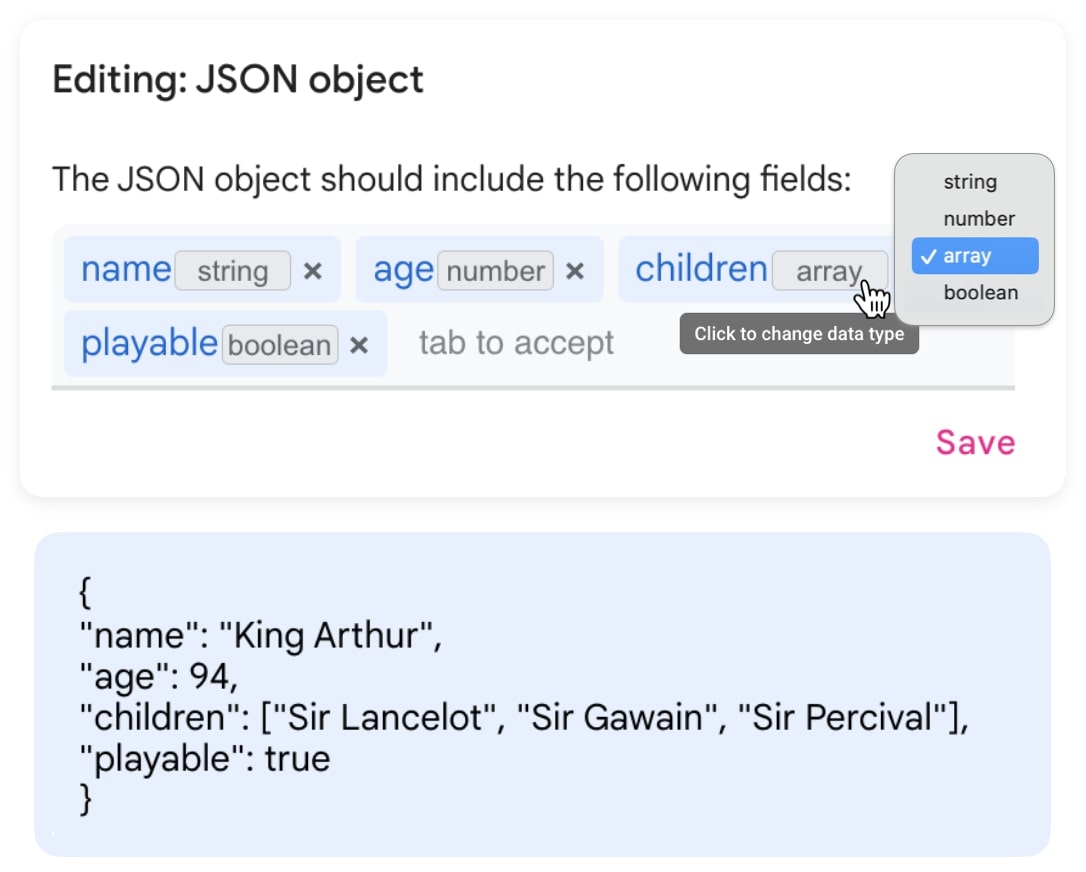

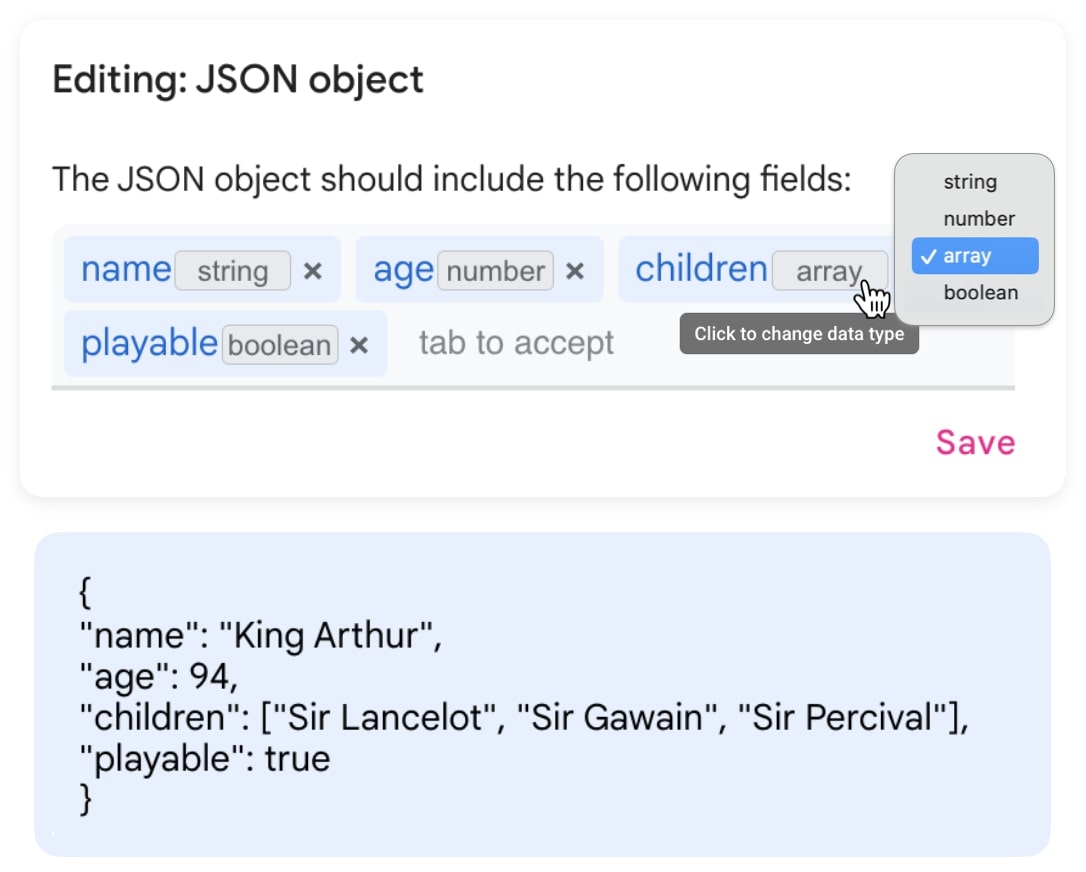

"We Need Structured Output": Towards User-centered Constraints on Large Language Model Output

Michael Xieyang Liu, Frederick Liu, Alexander J. Fiannaca, Terry Koo, Lucas Dixon, Michael Terry, Carrie J. Cai.

Extended Abstract in ACM CHI Conference on Human Factors in Computing Systems (CHI), 2024.

Large language models can produce creative and diverse responses. However, to integrate them into current developer workflows, it is essential to constrain their outputs to follow specific formats or standards. In this work, we surveyed 51 experienced industry professionals to understand the range of scenarios and motivations driving the need for output constraints from a user-centered perspective. We identified 134 concrete use cases for constraints at two levels: low-level, which ensures the output adheres to a structured format and an appropriate length, and high-level, which requires the output to follow semantic and stylistic guidelines without hallucination. Critically, applying output constraints could not only streamline the currently repetitive process of developing, testing, and integrating LLM prompts for developers, but also enhance the user experience of LLM-powered features and applications. We conclude with a discussion on user preferences and needs towards articulating intended constraints for LLMs, alongside an initial design for a constraint prototyping tool.

LLM Comparator: Visual Analytics for Side-by-Side Evaluation of Large Language Models

Minsuk Kahng, Ian Tenney, Mahima Pushkarna, Michael Xieyang Liu, James Wexler, Emily Reif, Krystal Kallarackal, Minsuk Chang, Michael Terry, Lucas Dixon.

Extended Abstract in ACM CHI Conference on Human Factors in Computing Systems (CHI), 2024.

Automatic side-by-side evaluation has emerged as a promising approach to evaluating the quality of responses from large language models (LLMs). However, analyzing the results from this evaluation approach raises scalability and interpretability challenges. In this paper, we present LLM Comparator, a novel visual analytics tool for interactively analyzing results from automatic side-by-side evaluation. The tool supports interactive workflows for users to understand when and why a model performs better or worse than a baseline model, and how the responses from two models are qualitatively different. We iteratively designed and developed the tool by closely working with researchers and engineers at a large technology company. This paper details the user challenges we identified, the design and development of the tool, and an observational study with participants who regularly evaluate their models.

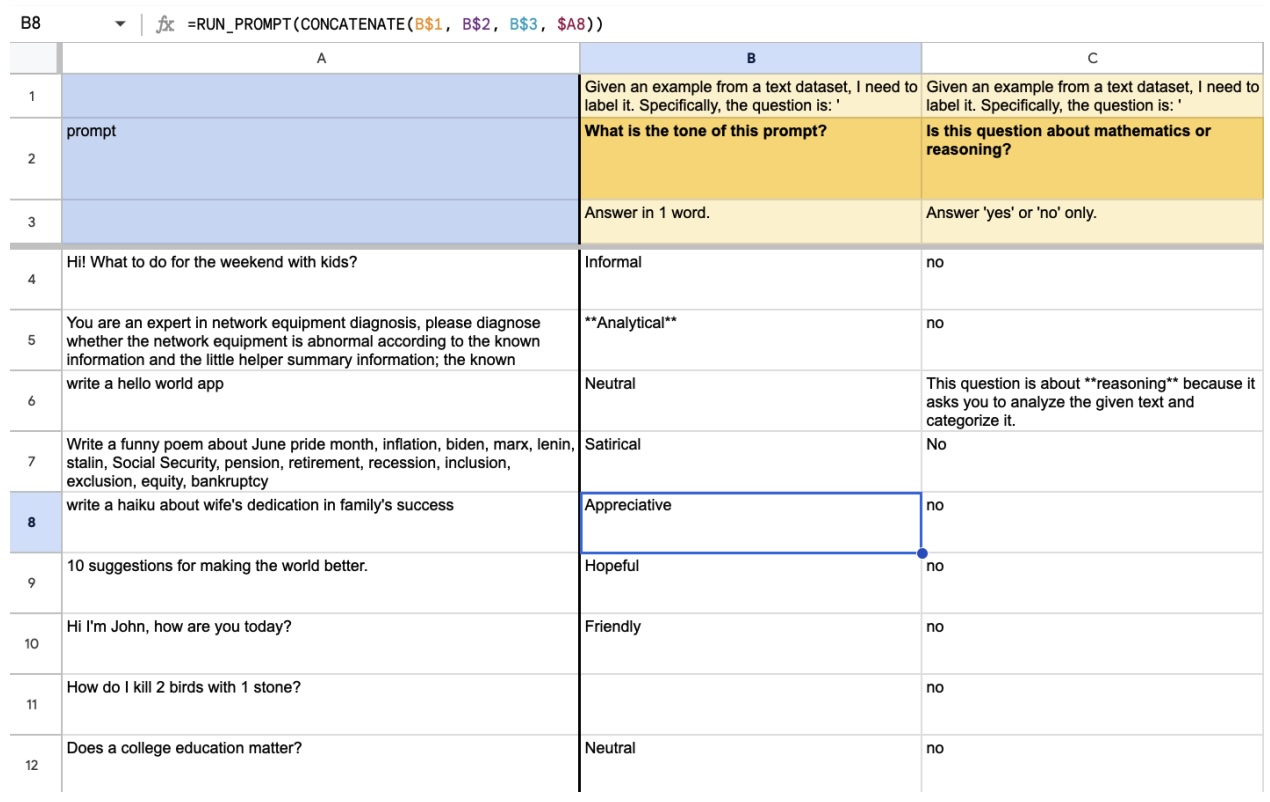

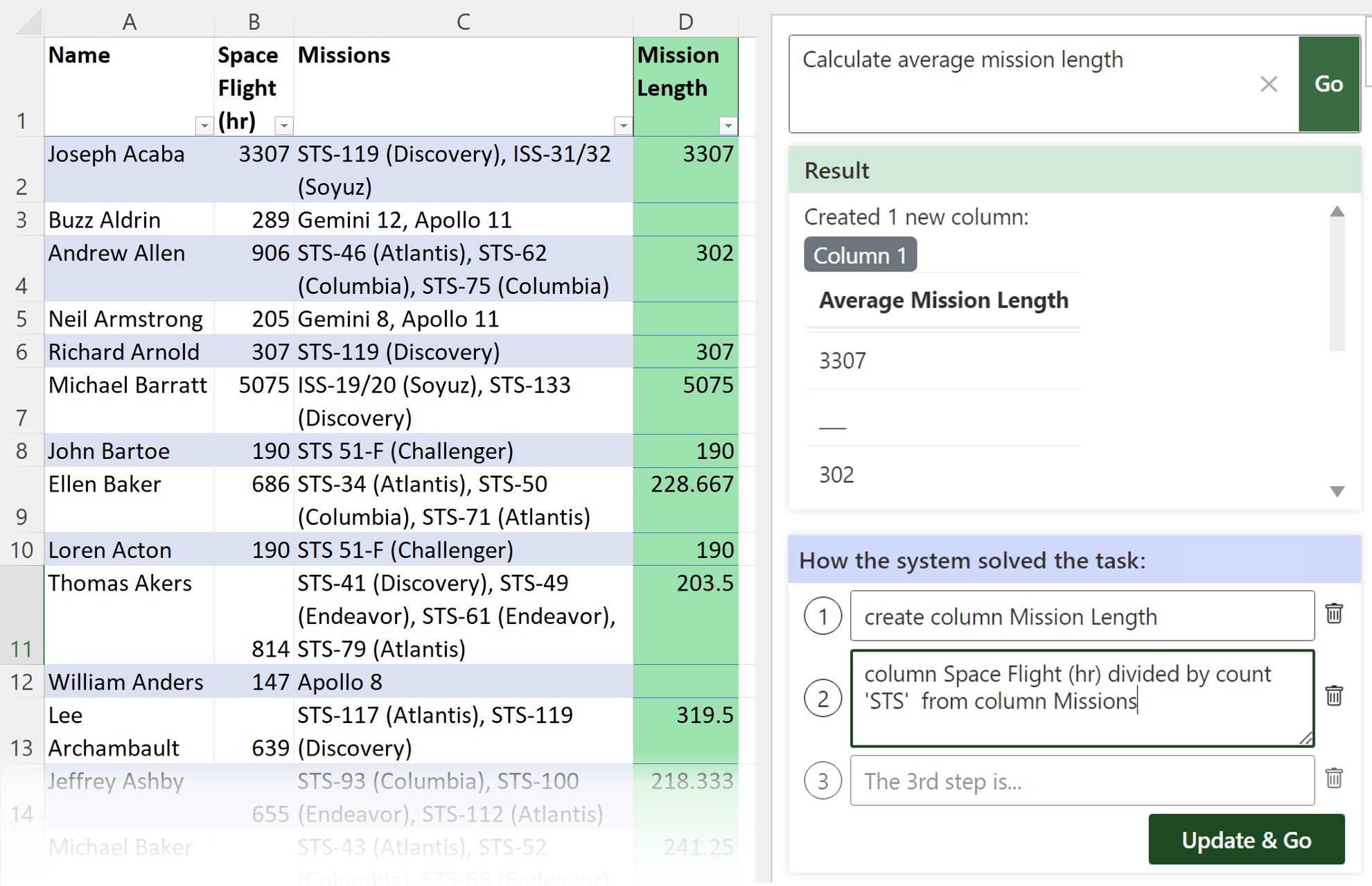

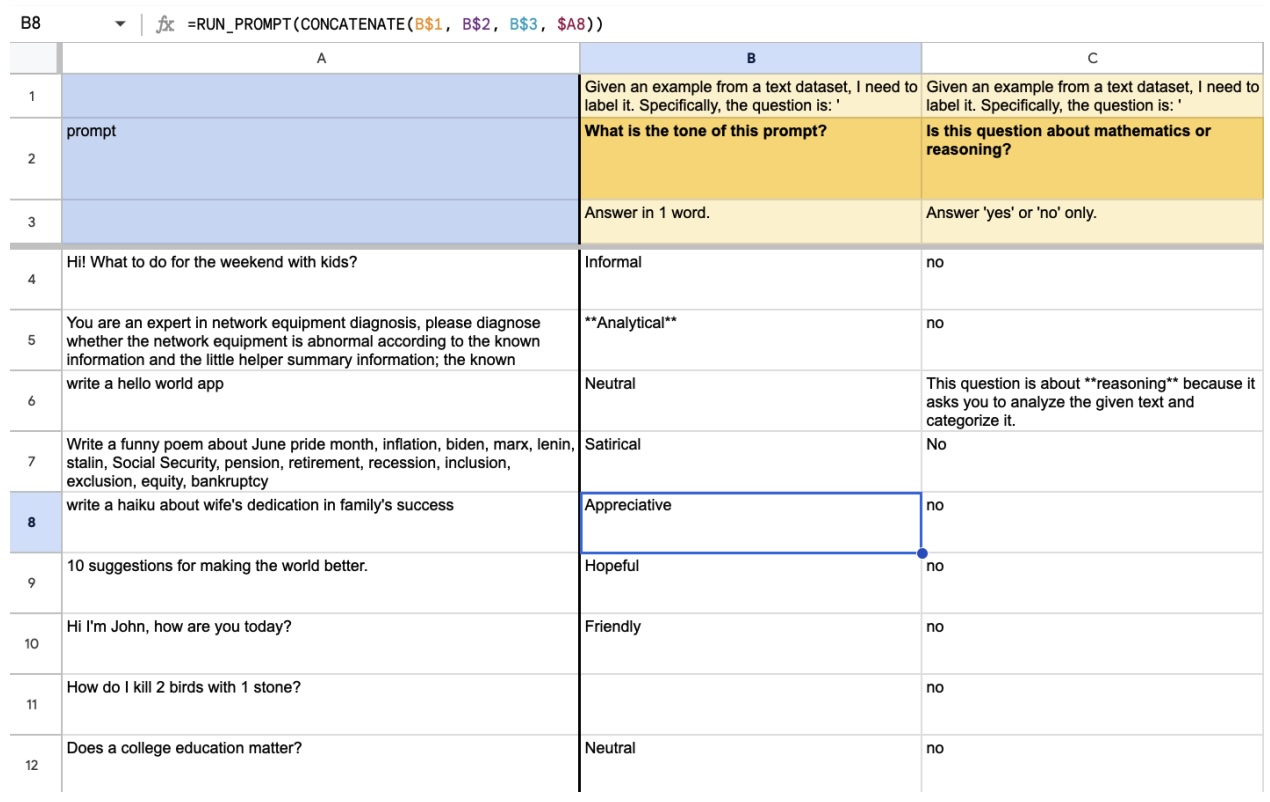

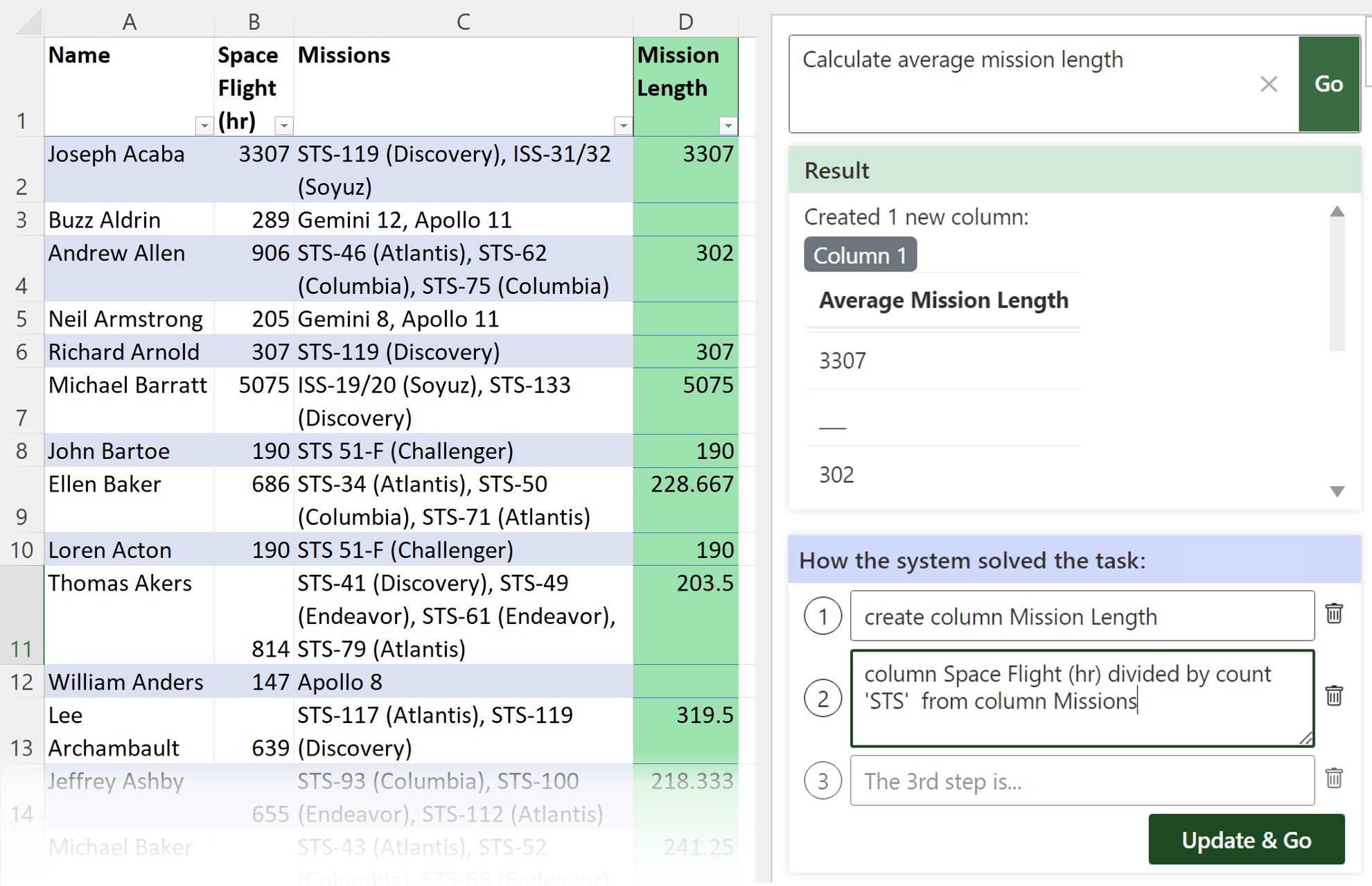

"What It Wants Me To Say": Bridging the Abstraction Gap Between End-User Programmers and Code-Generating Large Language Models

Michael Xieyang Liu, Advait Sarkar, Carina Negreanu, Ben Zorn, Jack Williams, Neil Toronto, Andrew D. Gordon.

ACM CHI Conference on Human Factors in Computing Systems (CHI), 2023.

🏅 Best Paper Honorable Mention Award

Code-generating large language models translate natural language into code. However, only a small portion of the infinite space of naturalistic utterances is effective at guiding code generation. For non-expert end-user programmers, learning this is the challenge of abstraction matching. We examine this challenge in the specific context of data analysis in spreadsheets, in a system that maps the user's natural language query to Python code using the Codex generator, executes the code, and shows the result. We propose grounded abstraction matching, which bridges the abstraction gap by translating the code back into a systematic and predictable naturalistic utterance. In a between-subjects, think-aloud study (n=24), we compare grounded abstraction matching to an ungrounded alternative based on previously established query framing principles. We find that the grounded approach improves end-users' understanding of the scope and capabilities of the code-generating model, and the kind of language needed to use it effectively.

Facilitating Counselor Reflective Learning With a Real-time Annotation Tool

Tianying Chen, Michael Xieyang Liu, Emily Ding, Emma O’Neil, Mansi Agarwal, Robert E. Kraut, Laura Dabbish.

ACM CHI Conference on Human Factors in Computing Systems (CHI), 2023.

Experiential training, where mental health professionals practice their learned skills, remains the most costly component of therapeutic training. We introduce Pinion, a tool that supports experiential learning of counseling skills through interactive role-play as clients and counselors. In Pinion, counselors annotate, or "pin," the important moments in their role-play sessions in real time. The pins are then used post-session to facilitate a reflective learning process. We discuss the design and qualitative evaluation of Pinion with a set of healthcare professionals learning motivational interviewing (MI). Our evaluation suggests that Pinion helped users develop empathy and become more aware of their skill usage, guaranteed immediate and targeted feedback, and helped users correct misconceptions about their performance. We discuss implications for the design of experiential training tools for learning counseling skills.

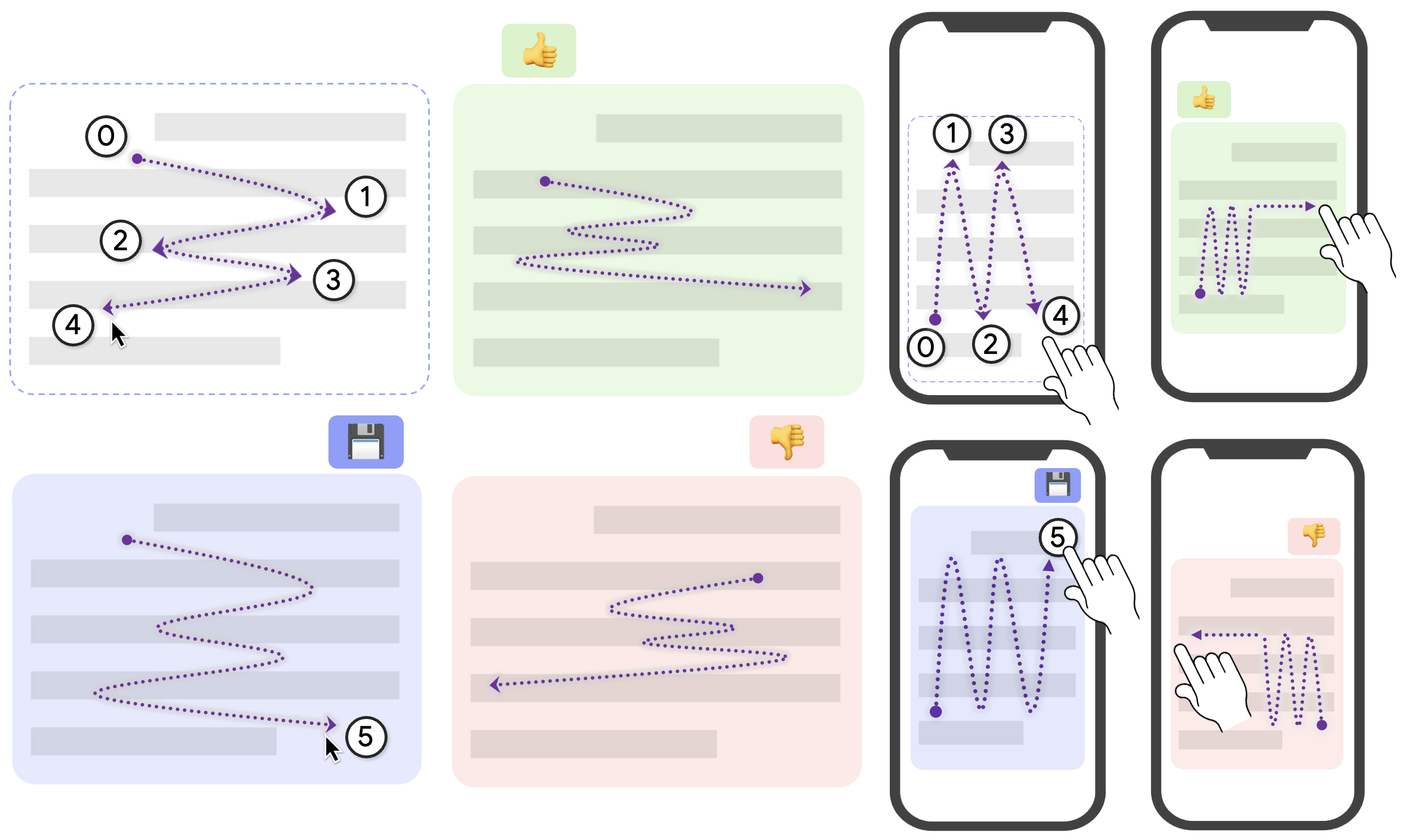

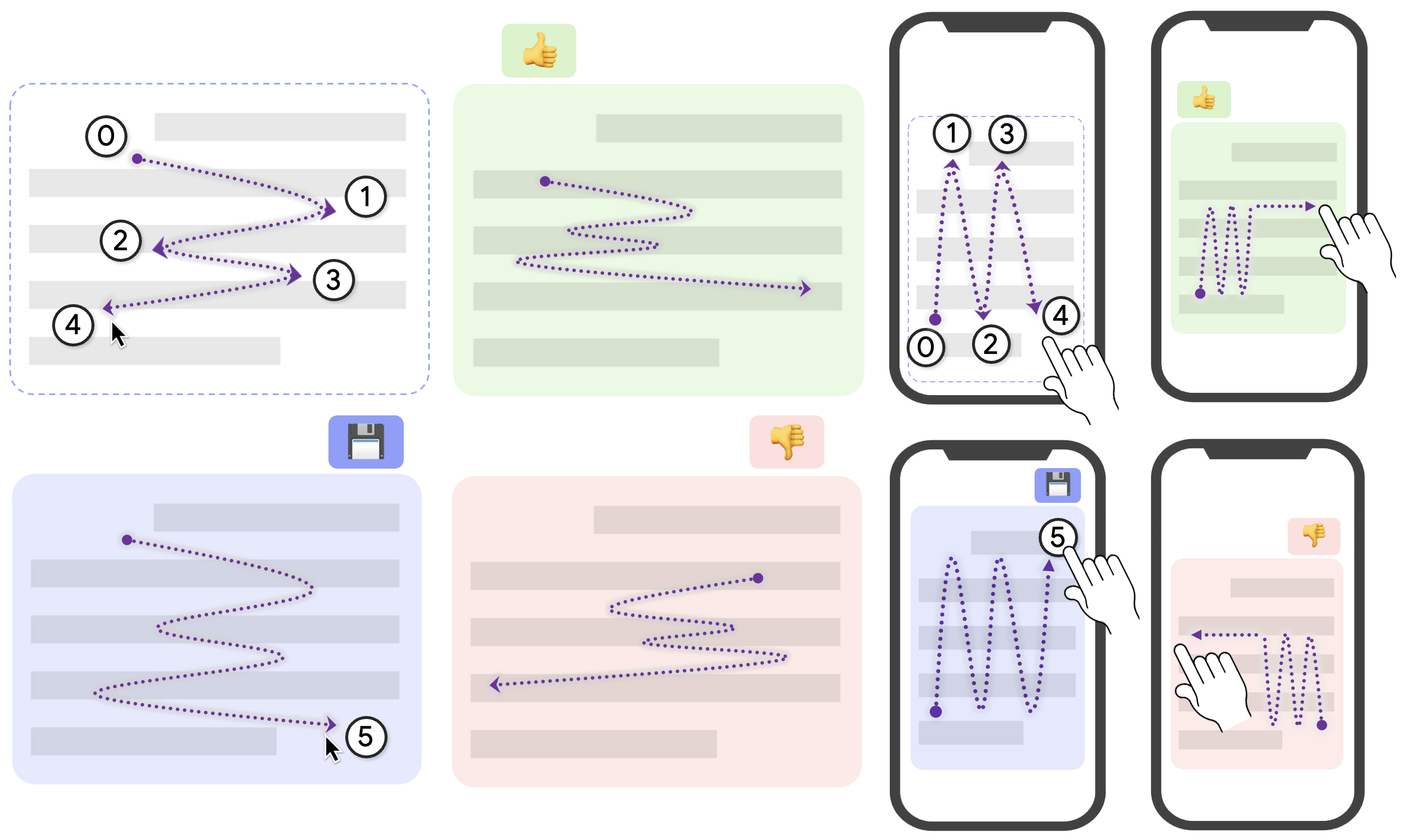

Wigglite: Low-cost Information Collection and Triage

Michael Xieyang Liu, Andrew Kuznetsov, Yongsung Kim, Joseph Chee Chang, Aniket Kittur, Brad A. Myers.

ACM Symposium on User Interface Software and Technology (UIST), 2022.

Consumers conducting comparison shopping, researchers making sense of a competitive space, and developers looking for code snippets online all face the challenge of capturing the information they find for later use without interrupting their current flow. In addition, during many learning and exploration tasks, people need to externalize their mental context, such as estimating how urgent a topic is to follow up on, or rating a piece of evidence as a “pro” or “con,” which helps scaffold subsequent deeper exploration. However, current approaches incur a high cost, often requiring users to select, copy, context switch, paste, and annotate information in a separate document without offering specific affordances that capture their mental context. In this work, we explore a new interaction technique called “wiggling,” which can be used to fluidly collect, organize, and rate information during early sensemaking stages with a single gesture. Wiggling involves rapid back-and-forth movements of a pointer or up-and-down scrolling on a smartphone, which can indicate the information to be collected and its valence, using a single, lightweight gesture that does not interfere with other interactions that are already available. Through implementation and user evaluation, we found that wiggling helped participants accurately collect information and encode their mental context with a 58% reduction in operational cost while being 24% faster compared to a common baseline.

Freedom to Choose: Understanding Input Modality Preferences of People with Upper-body Motor Impairments for Activities of Daily Living

Franklin Mingzhe Li, Michael Xieyang Liu, Yang Zhang, Patrick Carrington.

ACM SIGACCESS Conference on Computers and Accessibility, 2022.

Many people with upper-body motor impairments encounter challenges while performing Activities of Daily Living (ADLs) and Instrumental Activities of Daily Living (IADLs), such as toileting, grooming, and managing finances, which have impacts on their Quality of Life (QOL). Although existing assistive technologies enable people with upper-body motor impairments to use different input modalities to interact with computing devices independently (e.g., using voice to interact with a computer), many people still require Personal Care Assistants (PCAs) to perform ADLs. Multimodal input has the potential to enable users to perform ADLs without human assistance. We conducted 12 semi-structured interviews with people who have upper-body motor impairments to capture their existing practices and challenges of performing ADLs, identify opportunities to expand the input possibilities for assistive devices, and understand user preferences for multimodal interaction during everyday tasks. Finally, we discuss implications for the design and use of multimodal input solutions to support user independence and collaborative experiences when performing daily living tasks.

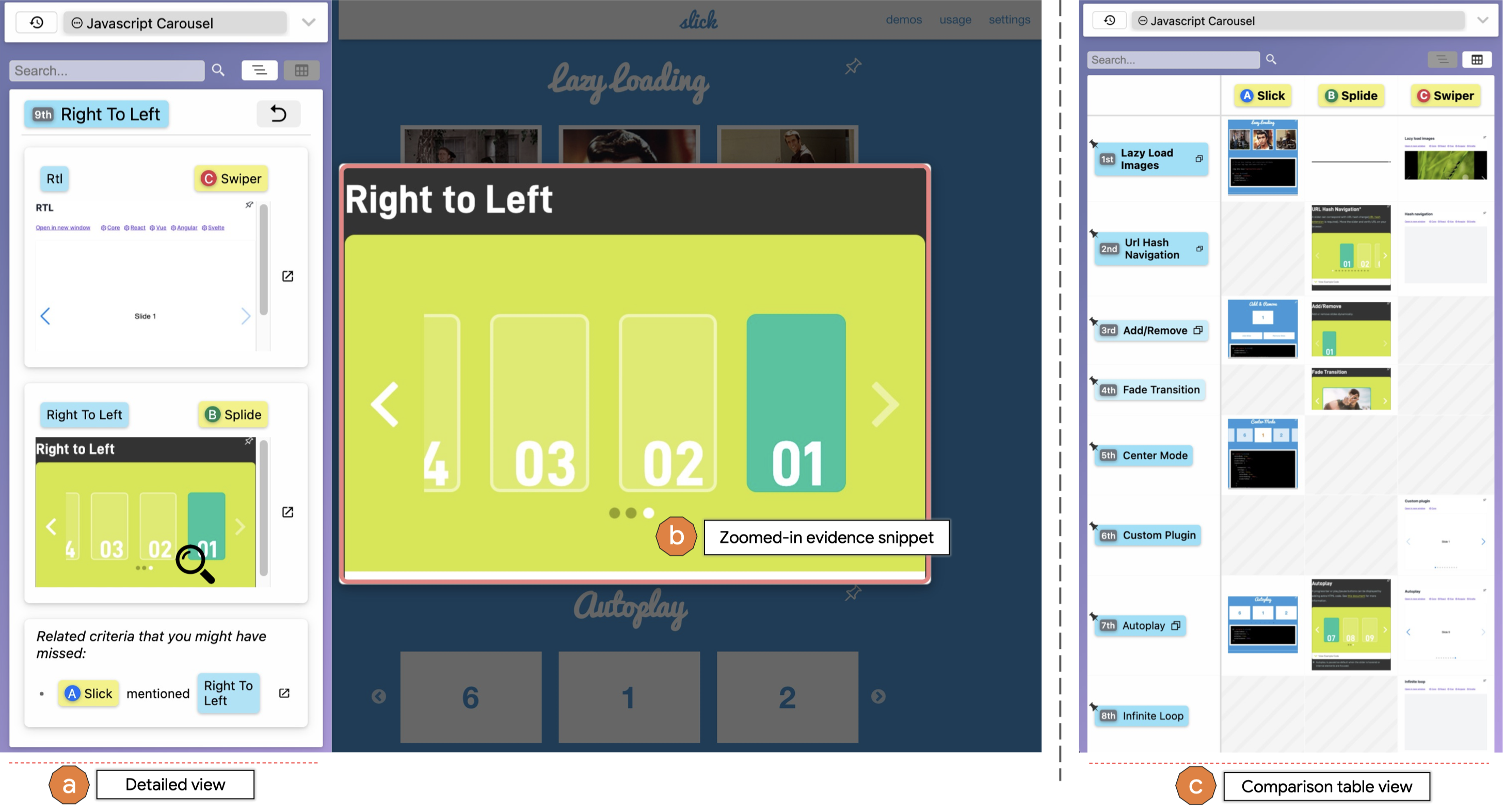

Crystalline: Lowering the Cost for Developers to Collect and Organize Information for Decision Making

Michael Xieyang Liu, Aniket Kittur, Brad A. Myers.

ACM CHI Conference on Human Factors in Computing Systems (CHI), 2022.

Developers perform online sensemaking on a daily basis, such as researching and choosing libraries and APIs. Prior research has introduced tools that help developers capture information from various sources and organize it into structures useful for subsequent decision-making. However, it remains a laborious process for developers to manually identify and clip content, maintaining its provenance and synthesizing it with other content. In this work, we introduce a new system called Crystalline that attempts to automatically collect and organize information into tabular structures as the user searches and browses the web. It leverages natural language processing to automatically group similar criteria together to reduce clutter as well as passive behavioral signals such as mouse movement and dwell time to infer what information to collect and how to visualize and prioritize it. Our user study suggests that developers are able to create comparison tables about 20% faster with a 60% reduction in operational cost without sacrificing the quality of the tables.

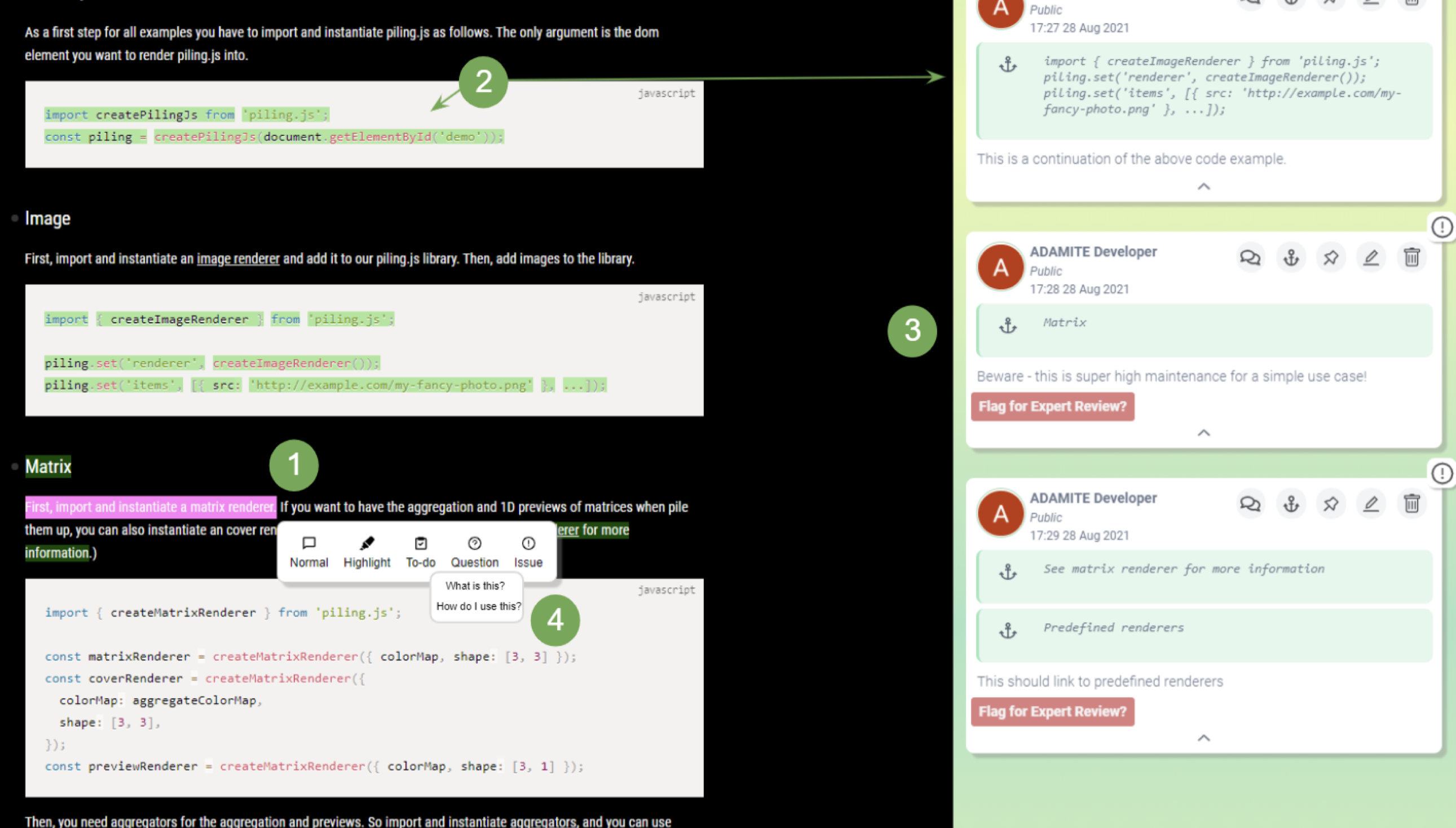

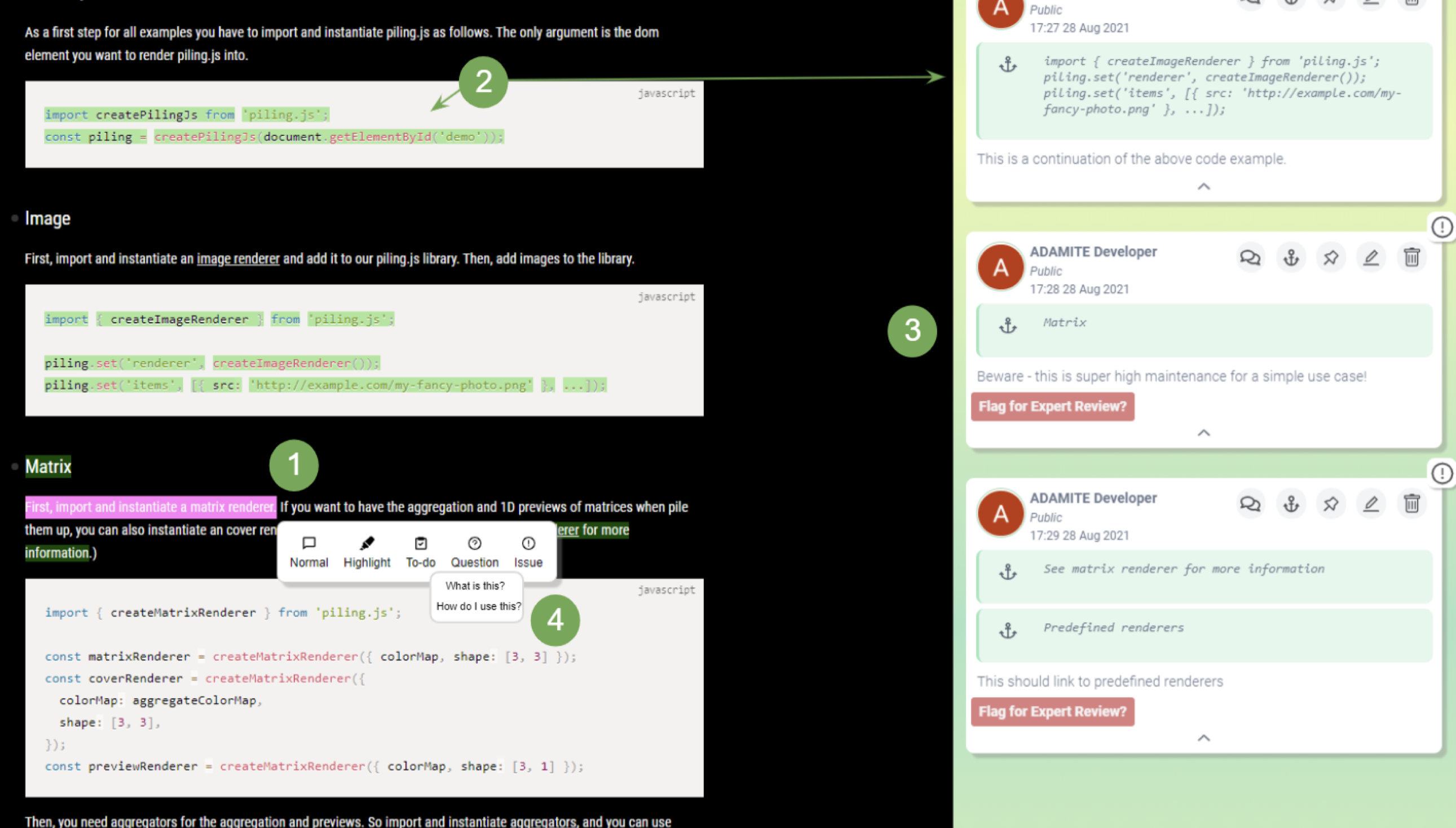

Understanding How Programmers Can Use Annotations on Documentation

Amber Horvath, Michael Xieyang Liu, River Hendriksen, Connor Shannon, Emma Paterson, Kazi Jawad, Andrew Macvean, Brad A. Myers.

ACM CHI Conference on Human Factors in Computing Systems (CHI), 2022.

Modern software development requires developers to find and effectively utilize new APIs and their documentation, but documentation has many well-known issues. Despite this, developers eventually overcome these issues but have no way of sharing what they learned. We investigate sharing this documentation-specific information through annotations, which have advantages over developer forums as the information is contextualized, not disruptive, and is short, thus easy to author. Developers can also author annotations to support their own comprehension. In order to support the documentation usage behaviors we found, we built the Adamite annotation tool, which provides features such as multiple anchors, annotation types, and pinning. In our user study, we found that developers are able to create annotations that are useful to themselves and are able to utilize annotations created by other developers when learning a new API, with readers of the annotations completing 67% more of the task, on average, than the baseline.

To Reuse or Not To Reuse? A Framework and System for Evaluating Summarized Knowledge

Michael Xieyang Liu, Aniket Kittur, Brad A. Myers.

ACM Conference on Computer Supported Cooperative Work and Social Computing (CSCW), 2021.

🏆 Best Paper Award

As the amount of information online continues to grow, a correspondingly important opportunity is for individuals to reuse knowledge which has been summarized by others rather than starting from scratch. However, appropriate reuse requires judging the relevance, trustworthiness, and thoroughness of others' knowledge in relation to an individual's goals and context. In this work, we explore augmenting judgements of the appropriateness of reusing knowledge in the domain of programming, specifically of reusing artifacts that result from other developers' searching and decision making. Through an analysis of prior research on sensemaking and trust, along with new interviews with developers, we synthesized a framework for reuse judgements. The interviews also validated that developers express a desire for help with judging whether to reuse an existing decision. From this framework, we developed a set of techniques for capturing the initial decision maker's behavior and visualizing signals calculated based on the behavior, to facilitate subsequent consumers' reuse decisions, instantiated in a prototype system called Strata. Results of a user study suggest that the system significantly improves the accuracy, depth, and speed of reusing decisions. These results have implications for systems involving user-generated content in which other users need to evaluate the relevance and trustworthiness of that content.

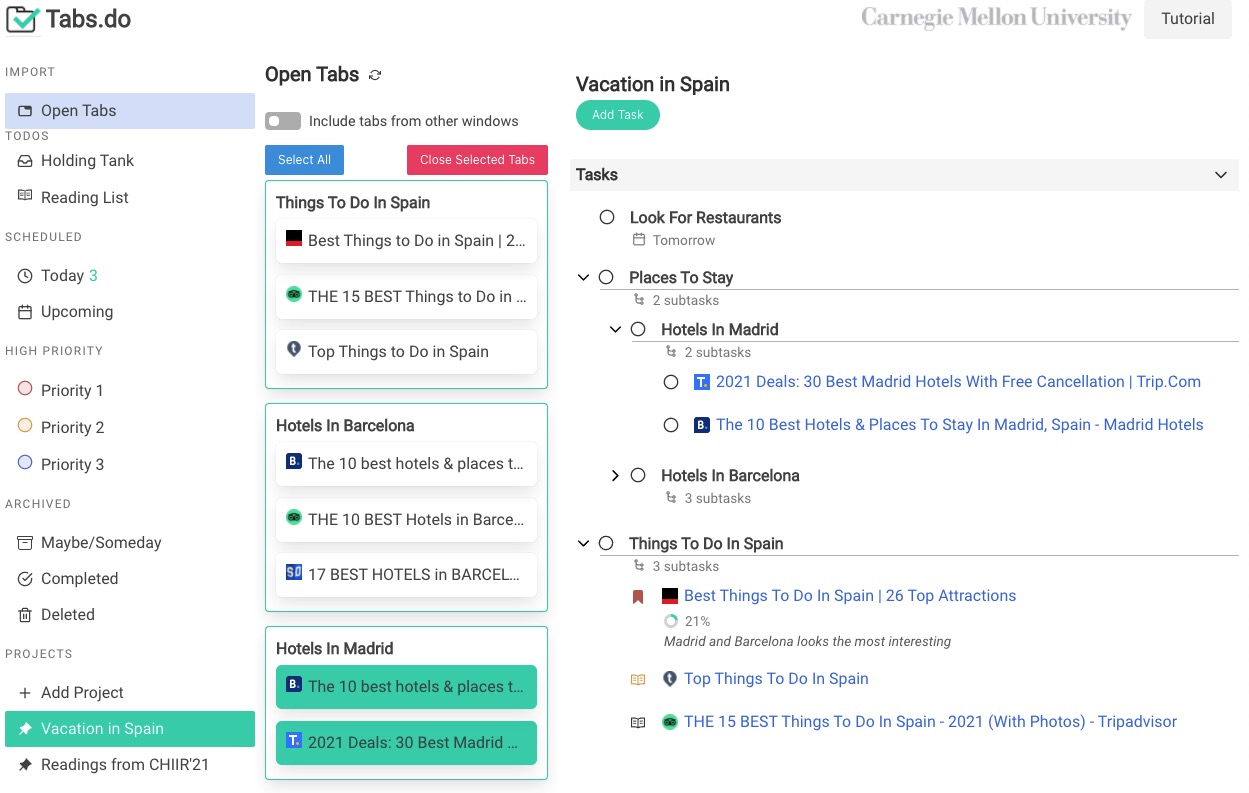

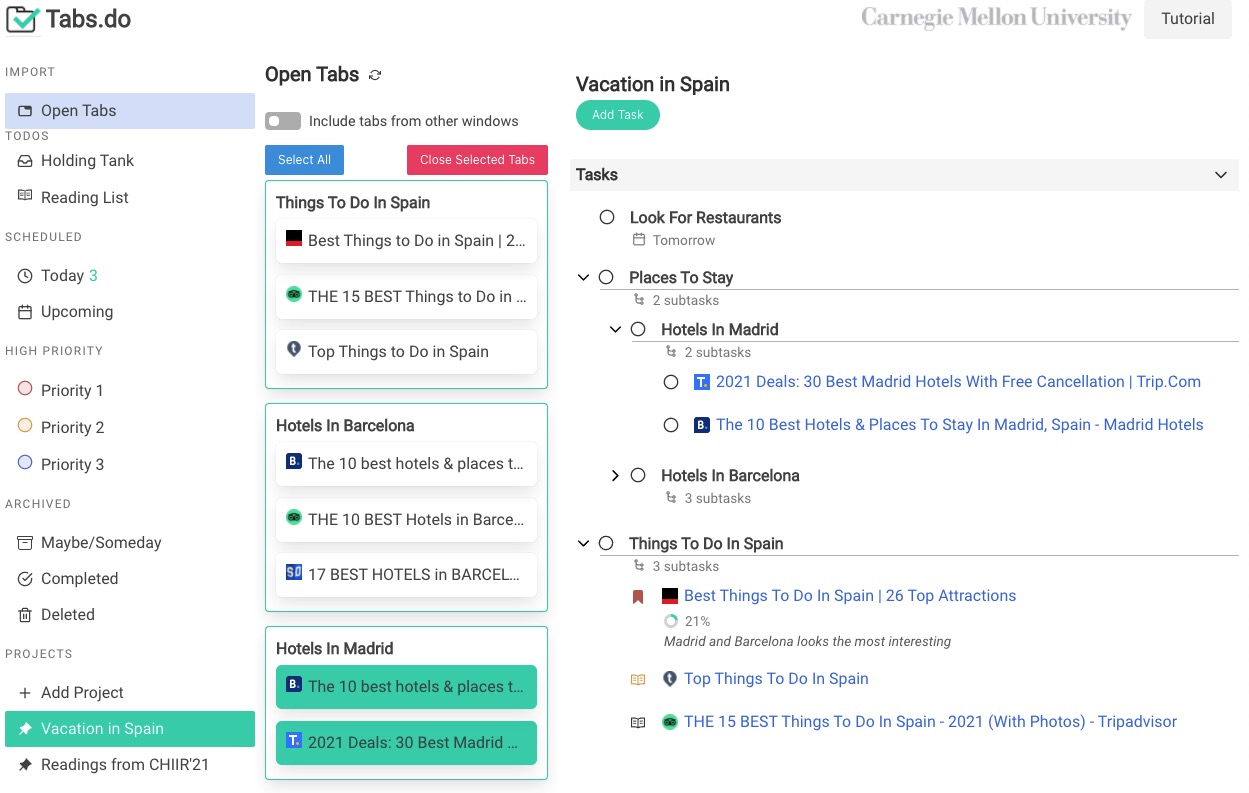

Tabs.do: Task-Centric Browser Tab Management

Joseph Chee Chang, Yongsung Kim, Victor Miller, Michael Xieyang Liu, Brad A. Myers, Aniket Kittur.

ACM Symposium on User Interface Software and Technology (UIST), 2021.

Despite the increasing complexity and scale of people's online activities, browser interfaces have stayed largely the same since tabs were introduced in major browsers nearly 20 years ago. The gap between simple tab-based browser interfaces and the complexity of users' tasks can lead to serious adverse effects – commonly referred to as "tab overload." This paper introduces a Chrome extension called Tabs.do, which explores bringing a task-centric approach to the browser, helping users to group their tabs into tasks and then organize, prioritize, and switch between those tasks fluidly. To lower the cost of importing, Tabs.do uses machine learning to make intelligent suggestions for grouping users' open tabs into task bundles by exploiting behavioral and semantic features. We conducted a field deployment study where participants used Tabs.do with their real-life tasks in the wild, and showed that Tabs.do decreased tab clutter, enabled users to create rich task structures with lightweight interactions, and allowed participants to context-switch among tasks more efficiently.

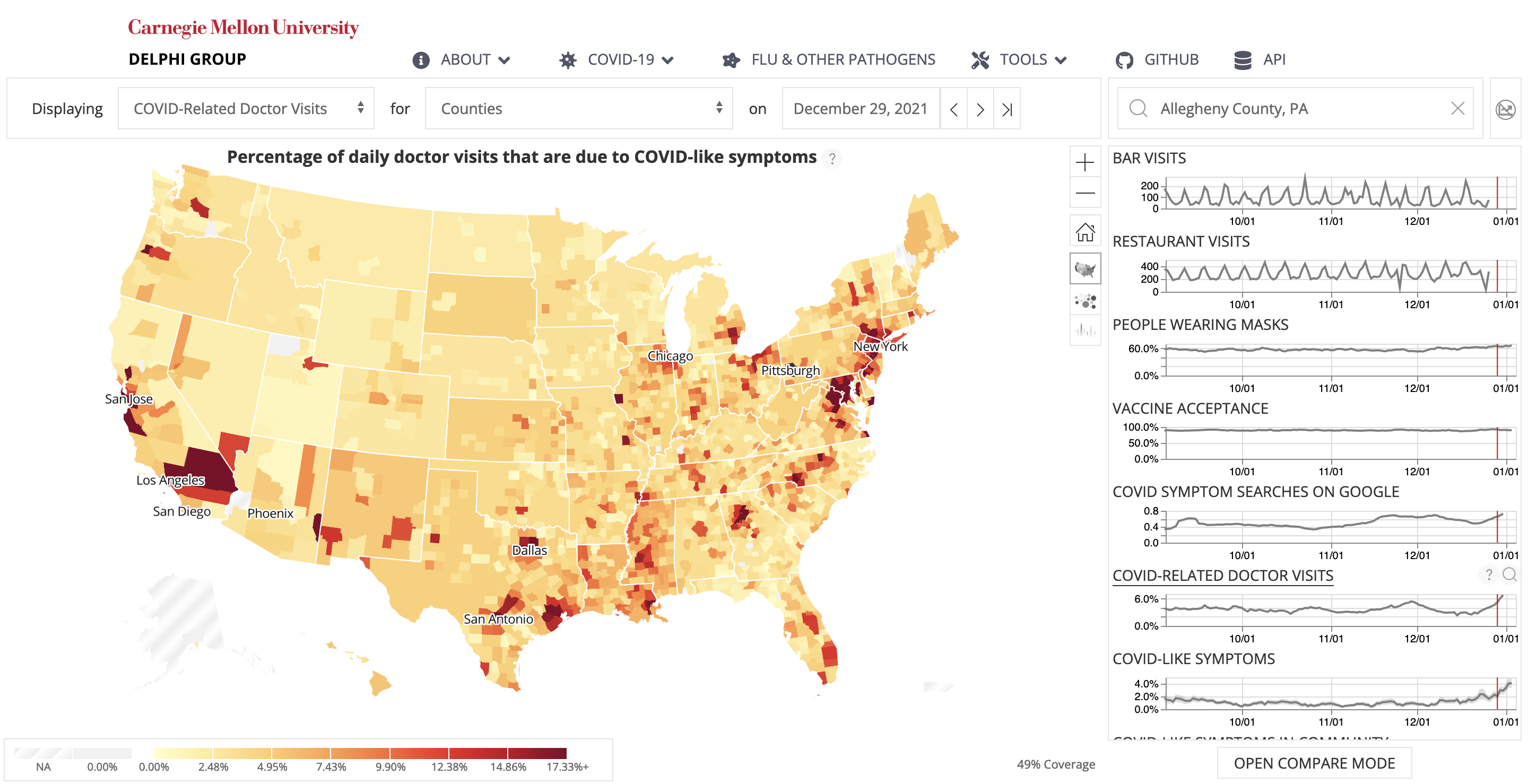

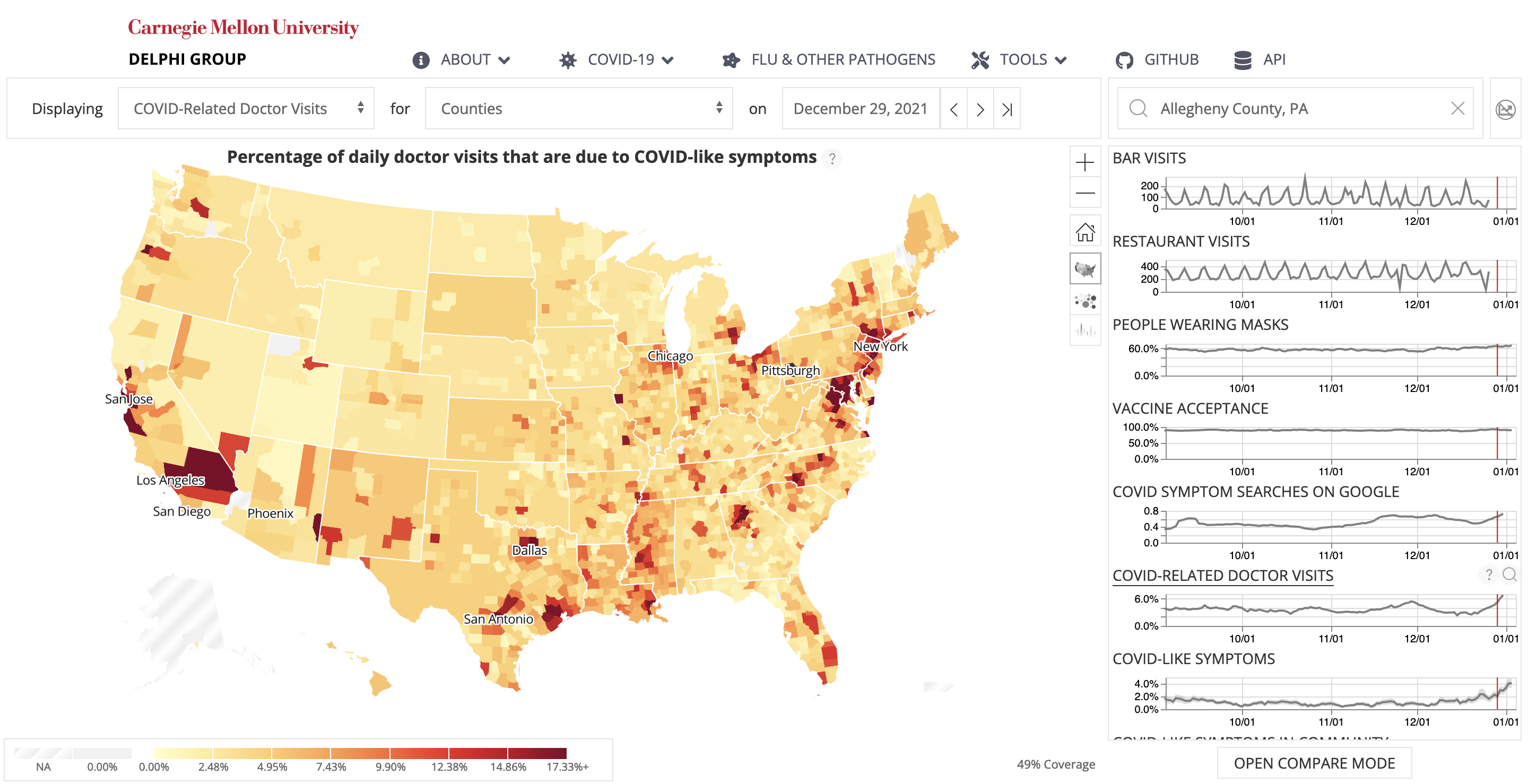

An open repository of real-time COVID-19 indicators

Alex Reinhart, Logan Brooks, Maria Jahja, Aaron Rumack, Jingjing Tang, [et al., including Michael Xieyang Liu].

Proceedings of the National Academy of Sciences (PNAS), 2021.

The COVID-19 pandemic presented enormous data challenges in the United States. Policy makers, epidemiological modelers, and health researchers all require up-to-date data on the pandemic and relevant public behavior, ideally at fine spatial and temporal resolution. The COVIDcast API is our attempt to fill this need: Operational since April 2020, it provides open access to both traditional public health surveillance signals (cases, deaths, and hospitalizations) and many auxiliary indicators of COVID-19 activity, such as signals extracted from deidentified medical claims data, massive online surveys, cell phone mobility data, and internet search trends. These are available at a fine geographic resolution (mostly at the county level) and are updated daily. The COVIDcast API also tracks all revisions to historical data, allowing modelers to account for the frequent revisions and backfill that are common for many public health data sources. All of the data are available in a common format through the API and accompanying R and Python software packages. This paper describes the data sources and signals, and provides examples demonstrating that the auxiliary signals in the COVIDcast API present information relevant to tracking COVID activity, augmenting traditional public health reporting and empowering research and decision-making.

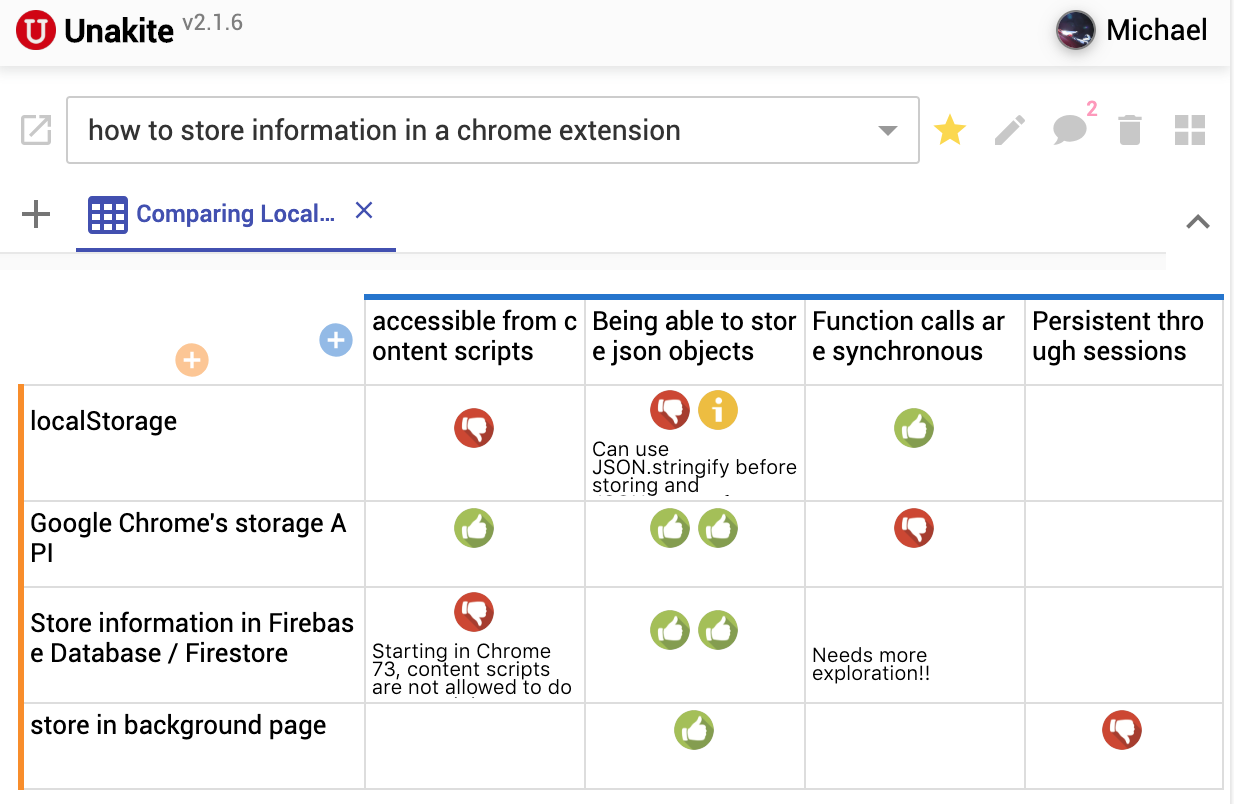

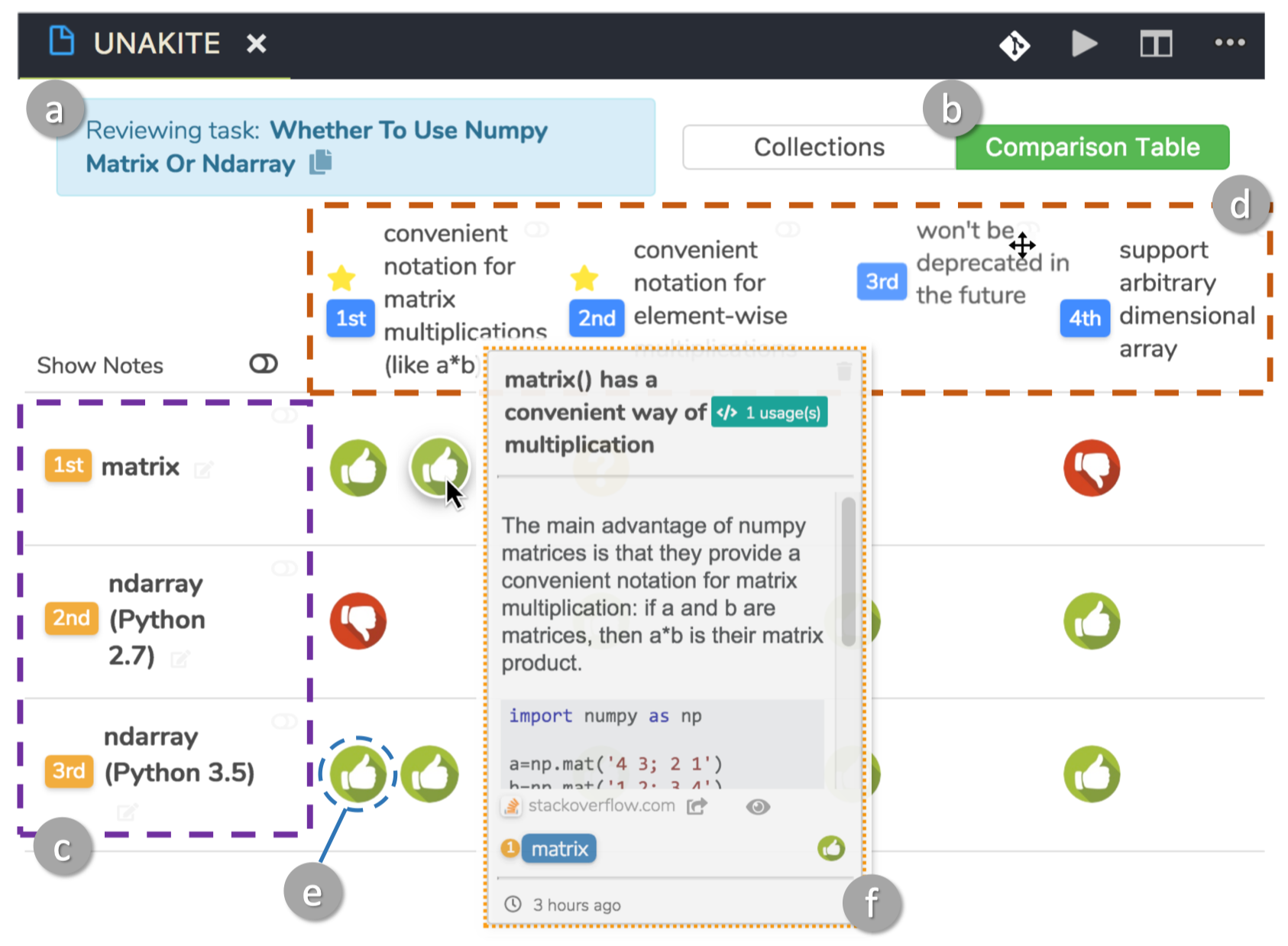

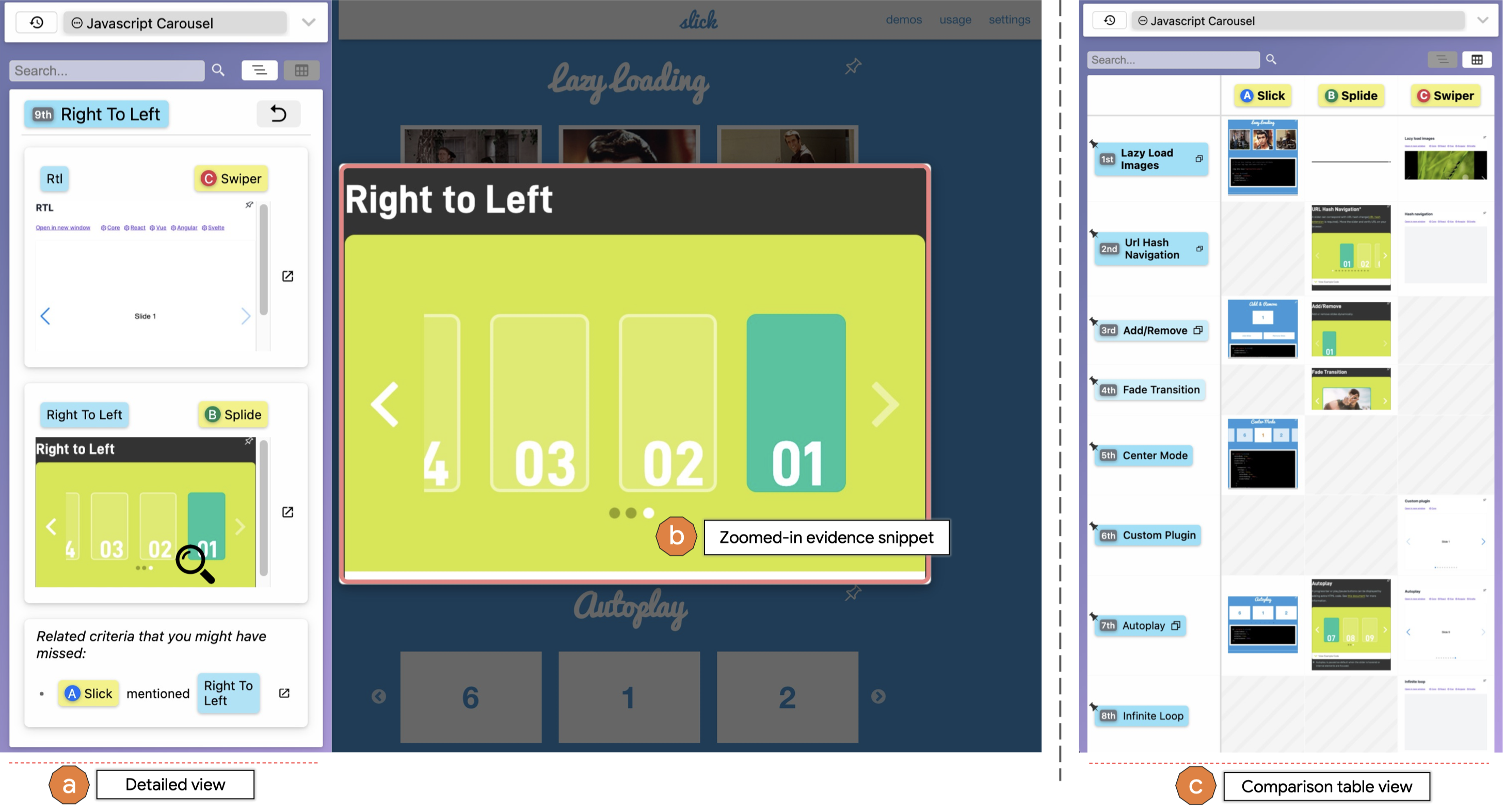

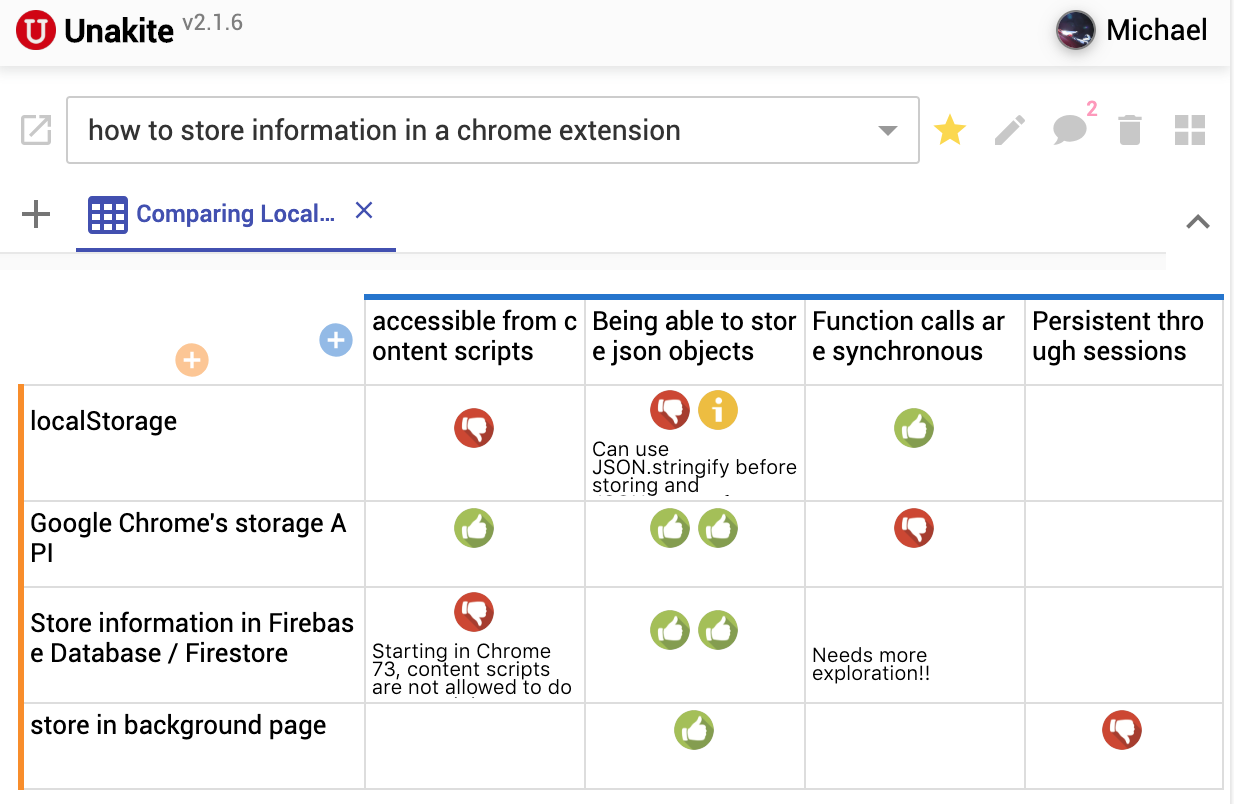

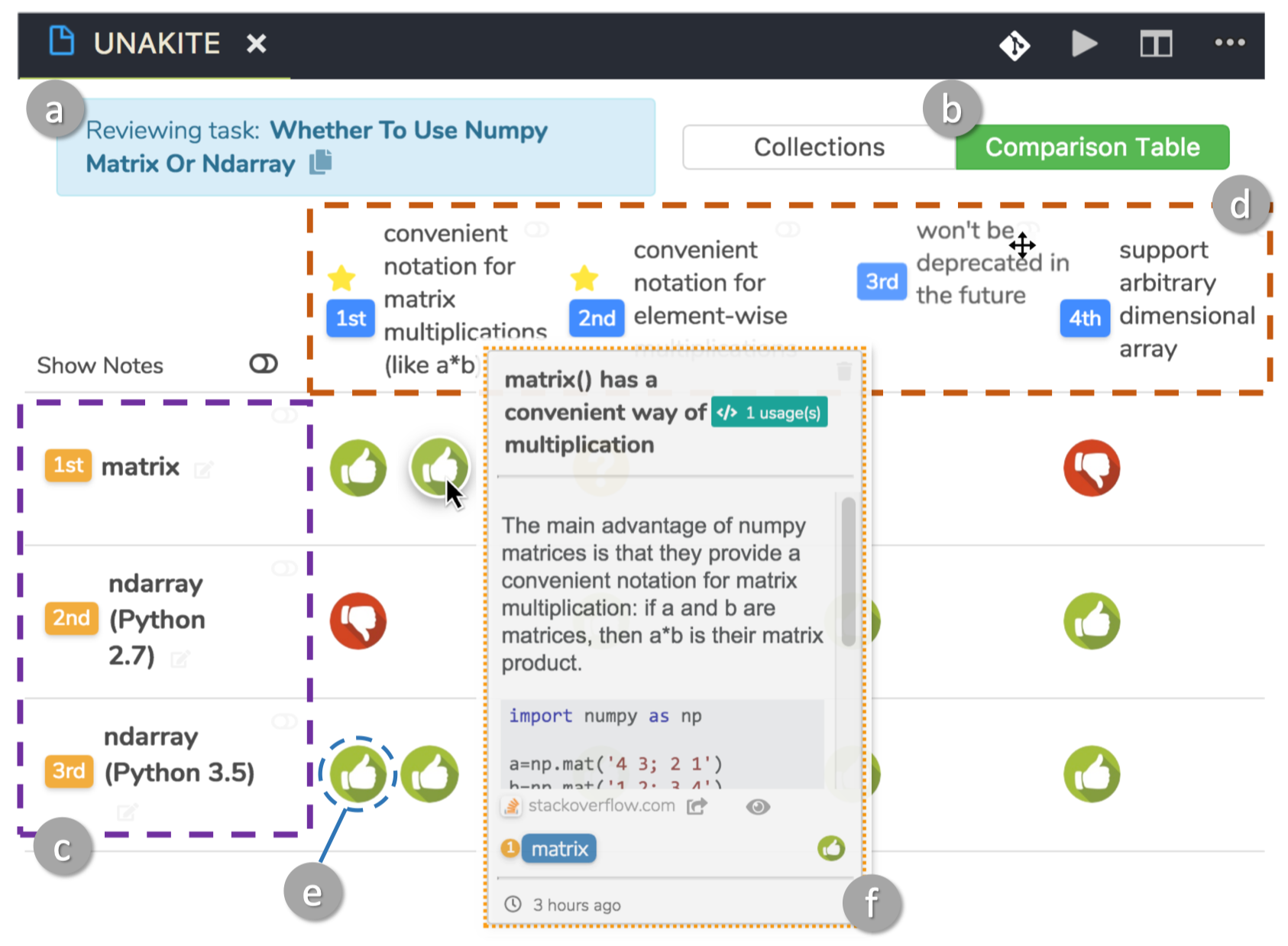

Unakite: Scaffolding Developers’ Decision-Making Using the Web

Michael Xieyang Liu, Jane Hsieh, Nathan Hahn, Angelina Zhou, Emily Deng, Shaun Burley, Cynthia Taylor, Aniket Kittur, Brad A. Myers.

ACM Symposium on User Interface Software and Technology (UIST), 2019.

🏅 Best Paper Honorable Mention Award

Developers spend a significant portion of their time searching for solutions and methods online. While numerous tools have been developed to support this exploratory process, in many cases the answers to developers' questions involve trade-offs among multiple valid options and not just a single solution. Through interviews, we discovered that developers express a desire for help with decision-making and understanding trade-offs. Through an analysis of Stack Overflow posts, we observed that many answers describe such trade-offs. These findings suggest that tools designed to help a developer capture information and make decisions about trade-offs can provide crucial benefits for both the developers and others who want to understand their design rationale. In this work, we probe this hypothesis with a prototype system named Unakite that collects, organizes, and keeps track of information about trade-offs and builds a comparison table, which can be saved as a design rationale for later use. Our evaluation results show that Unakite reduces the cost of capturing tradeoff-related information by 45%, and that the resulting comparison table speeds up a subsequent developer's ability to understand the trade-offs by about a factor of three.

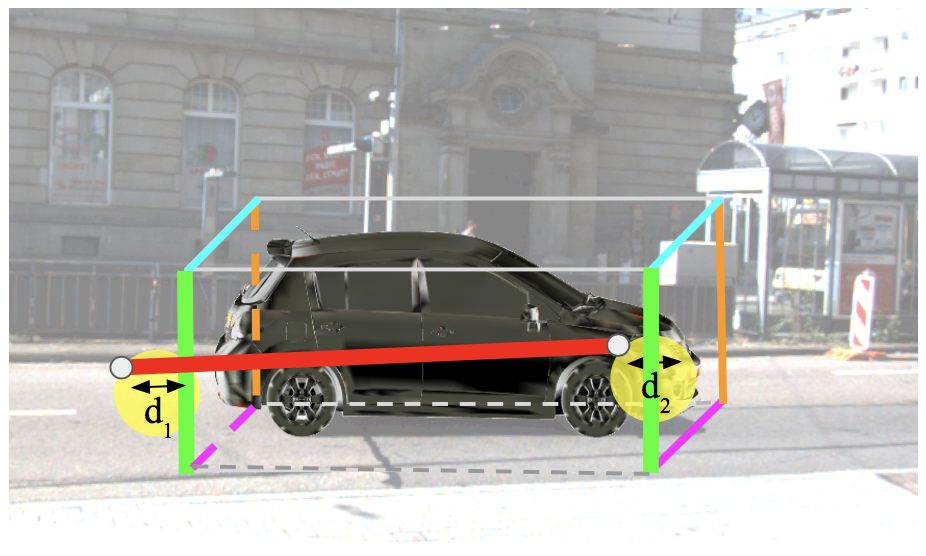

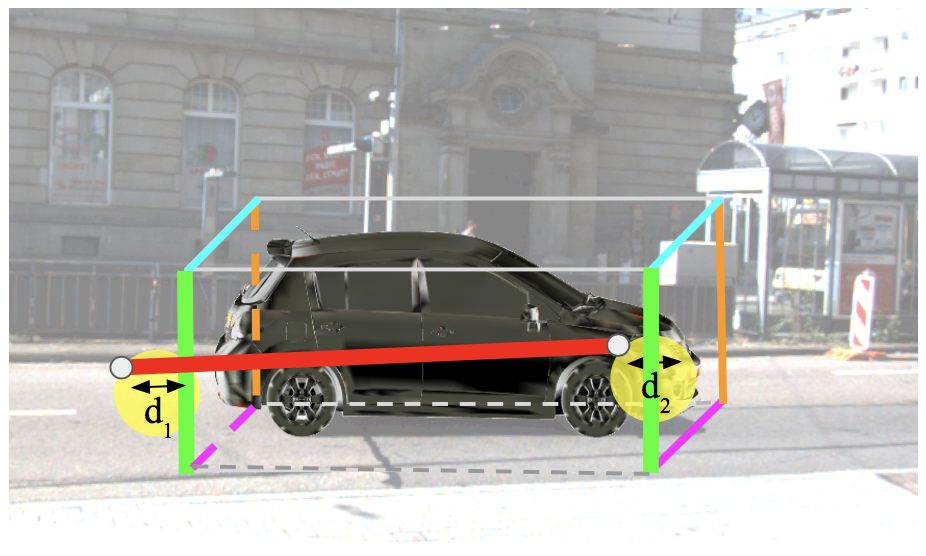

Popup: Reconstructing 3D Video Using Particle Filtering to Aggregate Crowd Responses

Jean Y. Song, Stephan J. Lemmer, Michael Xieyang Liu, Shiyan Yan, Juho Kim, Jason J. Corso, Walter S. Lasecki.

ACM International Conference on Intelligent User Interfaces (IUI), 2019.

Collecting a sufficient amount of 3D training data for autonomous vehicles to handle rare, but critical, traffic events (e.g., collisions) may take decades of deployment. Abundant video data of such events from municipal traffic cameras and video sharing sites (e.g., YouTube) could provide a potential alternative, but generating realistic training data in the form of 3D video reconstructions is a challenging task beyond the current capabilities of computer vision. Crowdsourcing manual annotations of necessary information has the potential to bridge this gap, but the level of accuracy required to attain usable reconstructions makes this a nearly impossible task for non-experts. In this paper, we propose a novel crowd-machine hybrid method that combines annotations from multiple contents by adopting particle filtering as an aggregation technique. Our approach is capable of leveraging temporal dependencies between video frames, enabling more aggressive filtering thresholds for annotations that can help improve the aggregation quality. The proposed method results in a 33% reduction in the relative error of position estimation compared to a state-of-the-art baseline. Moreover, our method enables skip-based (self-filtering) annotation that reduces the total annotation time for hard-to-annotate frames by 16%. Our approach provides a generalizable means of aggregating more accurate crowd responses even in settings where annotation is especially challenging or error-prone.

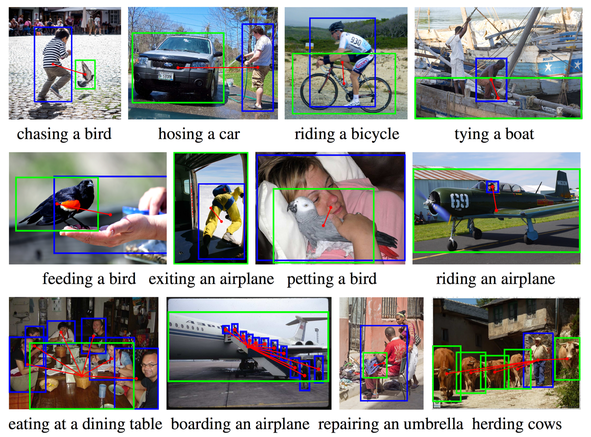

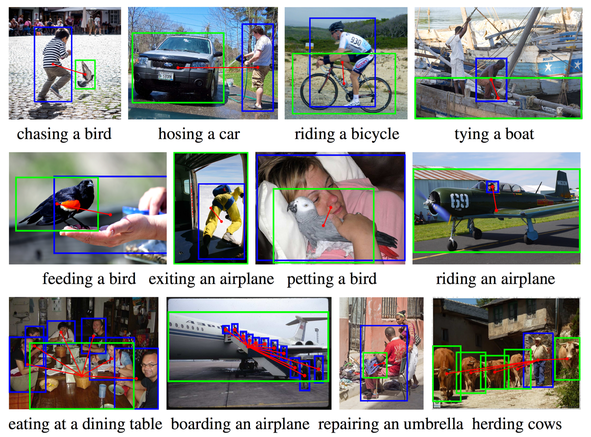

Learning to Detect Human-Object Interactions

Yu-Wei Chao, Yunfan Liu, Xieyang Liu, Huayi Zeng, Jia Deng.

IEEE Winter Conference on Applications of Computer Vision (WACV), 2018.

In this paper we study the problem of detecting human-object interactions (HOI) in static images, defined as predicting a human and an object bounding box with an interaction class label that connects them. HOI detection is a fundamental problem in computer vision as it provides semantic information about the interactions among the detected objects. We introduce HICO-DET, a new large benchmark for HOI detection, by augmenting the current HICO classification benchmark with instance annotations. We propose Human-Object Region-based Convolutional Neural Networks (HO-RCNN), a novel DNN-based framework for HOI detection. At the core of our HO-RCNN is the Interaction Pattern, a novel DNN input that characterizes the spatial relations between two bounding boxes. We validate the effectiveness of our HO-RCNN using HICO-DET. Experiments demonstrate that our HO-RCNN, by exploiting human-object spatial relations through Interaction Patterns, significantly improves the performance of HOI detection over baseline approaches.

Workshop Papers & Posters

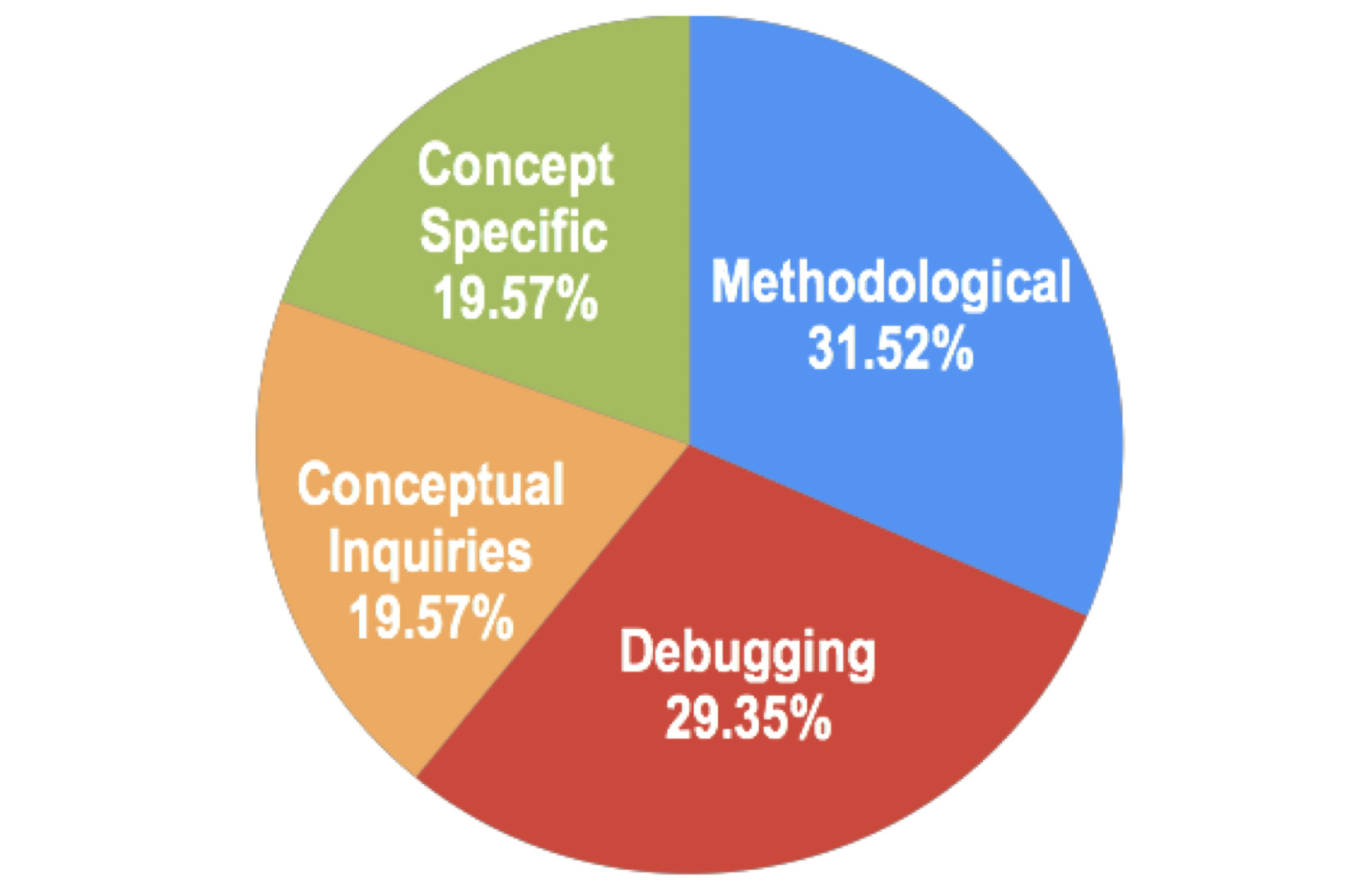

An Exploratory Study of Web Foraging to Understand and Support Programming Decisions

Jane Hsieh, Michael Xieyang Liu, Brad A. Myers, Aniket Kittur.

IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC), 2018.

Programmers consistently engage in cognitively demanding tasks such as sensemaking and decision-making. During the information-foraging process, programmers are growing more reliant on resources available online since they contain masses of crowdsourced information and are easier to navigate. Content available in questions and answers on Stack Overflow presents a unique platform for studying the types of problems encountered in programming and possible solutions. In addition to classifying these questions, we introduce possible visual representations for organizing the gathered information and propose that such models may help reduce the cost of navigating, understanding and choosing solution alternatives.

UNAKITE: Support Developers for Capturing and Persisting Design Rationales When Solving Problems Using Web Resources

Michael Xieyang Liu, Nathan Hahn, Angelina Zhou, Shaun Burley, Emily Deng, Aniket Kittur, Brad A. Myers.

DTSHPS'18 Workshop on Designing Technologies to Support Human Problem Solving, 2018.

UNAKITE is a new system that supports developers in collecting, organizing, consuming, and persisting design rationales while solving problems using web resources. Understanding design rationale has widely been recognized as significant for the success of a software engineering project. However, generating and embedding a useful design rationale in code is currently both time- and labor-intensive, with little immediate payoff for the developer. Under this cost structure, there is very little effective tool support to help developers keep track of design rationales. UNAKITE addresses this challenge for some design decisions by changing the cost structure: developers are incentivized to make decisions using UNAKITE's collecting and organizing mechanisms because they make tracking and deciding between alternatives easier than before; the structure thus generated is automatically embedded in the code as the design rationale when the developer copies sample code into their existing code. In a preliminary usability study, developers found UNAKITE to be usable for capturing design rationales and effective for interpreting the rationale of others.

Supporting Knowledge Acceleration for Programming from a Sensemaking Perspective

Michael Xieyang Liu, Shaun Burley, Emily Deng, Angelina Zhou, Aniket Kittur, Brad A. Myers.

Sensemaking Workshop @ The ACM Conference on Human Factors in Computing Systems (CHI), 2018.

Programmers spend a significant proportion of their time searching for and making sense of complex information. However, they often lack effective tools to help them make sense of the information, turn it into knowledge, or share it with their respective communities. In this position paper, we aim to help programmers collect, navigate, and organize knowledge to meet their goals while capturing this knowledge and making it useful for later programmers with similar needs. We describe barriers and challenges to creating this sustainable cycle, and we explore the design space and opportunities for effective tools and systems.

Selected Open-source Projects